Setting up Elasticsearch, Logstash, Kibana (ELK Stack) and Filebeat on Ubuntu 14.04 without SSL

01/07/2017 - ELASTICSEARCH, LINUX

In this example we are going to set up Elasticsearch, Logstash and Kibana (the ELK stack) together with Filebeat on Ubuntu 14.04 servers, without using SSL. For testing purposes, we will configure Filebeat to watch the regular Apache access log on the WEB server and forward it to Logstash on the ELK server. You can add more configurations to watch other logs, such as your website logs or syslog.

Flow

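This is how the system works: Filebeat on the WEB server reads the Apache access log and ships new lines to Logstash on the ELK server, Logstash parses them and stores the result in Elasticsearch, and Kibana is used to search and visualise the data.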

ELK server

Our ELK stack setup has three components as shown below.

- Logstash: Used to process incoming logs. Version 2.3.4

- Elasticsearch: Stores the logs. Version 2.4.5

- Kibana: Web GUI for searching and visualising logs. Version 4.5.4

Additionally, we have one server component, shown below. Our server's IP is 192.168.50.40.

- Java 8 version 1.8.0_131

WEB server

Our WEB server setup has one component as shown below.

- Filebeat: Used to read and forward logs from client servers to the remote ELK server. Version 5.4.0

Additionally, we have two server components, shown below. Our server's IP is 192.168.50.50.

- Apache2 version 2.4.7

- Java 8 version 1.8.0_131

If the client server goes down, Filebeat remembers where it left off in each log file and resumes from that point once everything is back online. It can be configured to send logs either directly to Elasticsearch or to Logstash.
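To make the resume behaviour concrete, here is a minimal Python sketch of the idea: remember the byte offset reached in a file and continue from there on the next run. The function name and the JSON state file are hypothetical; Filebeat keeps its own registry file with a different format, so treat this only as an illustration of the concept.

```python
import json
import os
from typing import List


def read_new_lines(log_path: str, state_path: str) -> List[str]:
    """Return complete lines appended to log_path since the previous call.

    The byte offset reached in each file is stored in state_path, a
    hypothetical JSON file (NOT Filebeat's real registry format), so a
    restarted reader continues where it stopped instead of starting over.
    """
    state = {}
    if os.path.exists(state_path):
        with open(state_path) as f:
            state = json.load(f)
    offset = state.get(log_path, 0)

    # A file smaller than the saved offset was truncated or replaced: start over.
    if os.path.getsize(log_path) < offset:
        offset = 0

    lines = []
    with open(log_path, "rb") as f:
        f.seek(offset)
        for raw in f:
            if not raw.endswith(b"\n"):
                break  # leave a half-written last line for the next call
            lines.append(raw.rstrip(b"\n").decode("utf-8"))
            offset += len(raw)

    state[log_path] = offset
    with open(state_path, "w") as f:
        json.dump(state, f)
    return lines
```

Calling it twice in a row returns every complete line the first time and an empty list the second time, until Apache appends more lines.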

ELK Server

Operating system

Updating repository database.

$ sudo apt-get -y update

Java

Adding repository source.

$ sudo add-apt-repository -y ppa:webupd8team/java

Updating repository database.

$ sudo apt-get -y update

Install.

$ sudo apt-get -y install oracle-java8-installer

Elasticsearch

Importing public key.

$ wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

Creating source list.

$ echo "deb http://packages.elastic.co/elasticsearch/2.x/debian stable main" | sudo tee -a /etc/apt/sources.list.d/elasticsearch-2.x.list

Updating repository database.

$ sudo apt-get -y update

Installing.

$ sudo apt-get -y install elasticsearch

Configuration.

$ sudo nano /etc/elasticsearch/elasticsearch.yml

cluster.name: elk-cluster
node.name: elk-node
# Elasticsearch 2.x name of this setting (it became bootstrap.memory_lock in 5.0)
bootstrap.mlockall: true
network.host: localhost
http.port: 9200

Starting.

$ sudo service elasticsearch start

Testing.

$ curl localhost:9200

{
  "name" : "elk-node",
  "cluster_name" : "elk-cluster",
  "cluster_uuid" : "Ocu_bS3jQ_G8rpAHVuRgBw",
  "version" : {
    "number" : "2.4.5",
    "build_hash" : "c849dd13904f53e63e88efc33b2ceeda0b6a1276",
    "build_timestamp" : "2017-04-24T16:18:17Z",
    "build_snapshot" : false,
    "lucene_version" : "5.5.4"
  },
  "tagline" : "You Know, for Search"
}
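If you want to script this check, for example in a provisioning script, a small Python sketch could fetch the same endpoint and compare cluster_name and version.number with what we expect. The helper names below are hypothetical; only the two JSON fields come from the output above.

```python
import json
import urllib.request


def fetch_root(url: str = "http://localhost:9200") -> str:
    """GET the Elasticsearch root endpoint (same as `curl localhost:9200`)."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")


def check_node(body: str, cluster: str, version_prefix: str) -> bool:
    """True if the response names the expected cluster and a matching version."""
    info = json.loads(body)
    return (
        info.get("cluster_name") == cluster
        and info.get("version", {}).get("number", "").startswith(version_prefix)
    )
```

On the ELK server, `check_node(fetch_root(), "elk-cluster", "2.4")` should return True with the configuration above.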

Check the current indices. There are none yet, so the command prints nothing.

$ curl localhost:9200/_cat/indices

Verify listening IP and port.

$ netstat -pltn

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp6 0 0 127.0.0.1:9200 :::* LISTEN -

Kibana

Creating source list.

$ echo "deb http://packages.elastic.co/kibana/4.5/debian stable main" | sudo tee -a /etc/apt/sources.list

Updating repository database.

$ sudo apt-get -y update

Installing.

$ sudo apt-get -y install kibana

Configuration.

$ sudo nano /opt/kibana/config/kibana.yml

server.port: 5601
server.host: "0.0.0.0"

Starting.

$ sudo service kibana start

Verify listening IP and port.

$ netstat -pltn

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN -

Check the current indices. As you can see below, Kibana's default ".kibana" index has been created.

$ curl localhost:9200/_cat/indices

yellow open .kibana 1 1 1 0 3kb 3kb
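The columns of this output are, in order: health, status, index, pri, rep, docs.count, docs.deleted, store.size and pri.store.size (add `?v` to the URL to print the header row). The "yellow" health is expected on a single-node cluster, because the replica (rep = 1) cannot be allocated on the same node as its primary. A minimal Python sketch for turning the output into a lookup table could look like this; the function name is hypothetical and the column list assumes the Elasticsearch 2.x default layout.

```python
from typing import Dict

# Default `_cat/indices` columns in Elasticsearch 2.x (no `h=` parameter given).
COLUMNS = ["health", "status", "index", "pri", "rep",
           "docs.count", "docs.deleted", "store.size", "pri.store.size"]


def parse_cat_indices(text: str) -> Dict[str, Dict[str, str]]:
    """Map index name -> {column: value} for every well-formed output line."""
    result = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != len(COLUMNS):
            continue  # skip blank lines or a header row from `?v`
        row = dict(zip(COLUMNS, parts))
        result[row["index"]] = row
    return result
```

This makes it easy to wait in a script until a given index, such as "web-apache-access" later on, shows up.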

You should now be able to access the GUI at http://192.168.50.40:5601 from your browser. However, no index patterns are available at this stage.

Download the sample Beats dashboards, which include the Filebeat index pattern, so that we can use them in the Kibana GUI.

$ curl -L -O https://download.elastic.co/beats/dashboards/beats-dashboards-1.2.2.zip

$ sudo apt-get -y install unzip

$ unzip beats-dashboards-1.2.2.zip

Load the index patterns into Elasticsearch. The script below loads the "packetbeat-*", "topbeat-*", "filebeat-*" and "winlogbeat-*" index patterns so that they become available in the Kibana GUI. You can see them on the left-hand side of the menu.

$ cd beats-dashboards-1.2.2

$ ./load.sh

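Download the Filebeat index template, which configures how Elasticsearch analyses incoming Filebeat fields. Note that a template only applies to indices whose names match its pattern (here "filebeat-*"), so it does not affect the "web-apache-access" index used later in this example.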

$ curl -O https://gist.githubusercontent.com/thisismitch/3429023e8438cc25b86c/raw/d8c479e2a1adcea8b1fe86570e42abab0f10f364/filebeat-index-template.json

Load the template.

$ curl -XPUT "http://localhost:9200/_template/filebeat?pretty" -d@filebeat-index-template.json

{
  "acknowledged" : true
}
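The same upload can be done from Python if you prefer not to use curl. This is a minimal sketch using only the standard library; `template_request` is a hypothetical helper that builds the same `PUT /_template/filebeat` call without sending it, so you can inspect it first.

```python
import json
import urllib.request


def template_request(path: str, name: str = "filebeat",
                     host: str = "http://localhost:9200") -> urllib.request.Request:
    """Build the PUT /_template/<name> request used by the curl command above."""
    with open(path, "rb") as f:
        body = f.read()
    json.loads(body)  # fail early if the file is not valid JSON
    return urllib.request.Request(
        f"{host}/_template/{name}",
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
```

To actually send it, pass the result to `urllib.request.urlopen(...)` and read the response, which should contain `"acknowledged" : true`.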

Logstash

Creating source list.

$ echo "deb http://packages.elastic.co/logstash/2.3/debian stable main" | sudo tee -a /etc/apt/sources.list

Updating repository database.

$ sudo apt-get -y update

Installing.

$ sudo apt-get -y install logstash

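The configuration below listens for Filebeat connections on port 5044, parses each Apache access log line with grok and stores the result in the "web-apache-access" Elasticsearch index. For testing, you can print events to the terminal instead by replacing the elasticsearch block with stdout { codec => rubydebug }.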

$ sudo nano /etc/logstash/conf.d/web-apache-access.conf

input {
  # Receive events shipped by Filebeat
  beats {
    port => 5044
  }
}

filter {
  # Split each Apache combined log line into named fields
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
}

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    sniffing => true
    # Do not install Logstash's own index template
    manage_template => false
    index => "web-apache-access"
    #document_type => "apache_logs" # This is unnecessary
  }
}

The COMBINEDAPACHELOG grok pattern for Apache logs is defined as below. You can find it in the grok-patterns file of the logstash-patterns-core plugin.

COMMONAPACHELOG %{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})" %{NUMBER:response} (?:%{NUMBER:bytes}|-)

COMBINEDAPACHELOG %{COMMONAPACHELOG} %{QS:referrer} %{QS:agent}

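As you can see above, the pattern creates the fields below in the Elasticsearch index, so you can use them in Kibana to build visualisations. Note that rawrequest is only filled when the request line cannot be split into verb, request and HTTP version, which is why it does not appear in the documents further down.

- clientip
- ident
- auth
- timestamp
- verb
- request
- httpversion
- rawrequest
- response
- bytes
- referrer
- agent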
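To see what the grok pattern does to a single line, here is a rough Python equivalent built on a hand-written regular expression. It approximates grok building blocks such as IPORHOST, HTTPDATE and QS with simpler expressions, so it is an illustration rather than an exact port. Like grok's QS, it keeps the surrounding quotes in referrer and agent, which is why referrer shows up as "\"-\"" in Elasticsearch.

```python
import re
from typing import Dict, Optional

# Simplified stand-in for %{COMBINEDAPACHELOG}; group names match the grok fields.
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?:(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?'
    r'|(?P<rawrequest>.*?))" '
    r'(?P<response>\d+) (?:(?P<bytes>\d+)|-) '
    r'(?P<referrer>"(?:[^"\\]|\\.)*") (?P<agent>"(?:[^"\\]|\\.)*")'
)


def parse_combined(line: str) -> Optional[Dict[str, str]]:
    """Return the matched fields, or None when the line does not match."""
    m = COMBINED.match(line)
    if m is None:
        return None  # grok would tag such an event with _grokparsefailure
    # Drop groups that did not participate (e.g. rawrequest on a normal request).
    return {k: v for k, v in m.groupdict().items() if v is not None}
```

As with grok, every value is a string, including response and bytes.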

Validate the configuration file. It takes about 10 seconds to get a confirmation.

$ sudo /opt/logstash/bin/logstash --configtest -f /etc/logstash/conf.d/web-apache-access.conf

Configuration OK

Starting.

$ sudo service logstash start

Verify listening IP and port.

$ netstat -pltn

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp6 0 0 :::5044 :::* LISTEN -

Open TCP port 5044 by appending a rule to the end of the iptables INPUT chain. This allows other hosts, such as our WEB server, to connect to port 5044.

$ sudo iptables -A INPUT -p tcp --dport 5044 -j ACCEPT

Listing the rules shows that TCP port 5044 is now open. However, the rule does not survive a reboot, so we will make it permanent below.

$ sudo iptables -L

Chain INPUT (policy ACCEPT)
target prot opt source destination
ACCEPT tcp -- anywhere anywhere tcp dpt:5044

Install iptables-persistent to make new iptables rule permanent.

$ sudo apt-get install iptables-persistent

Save new iptables rules.

$ sudo invoke-rc.d iptables-persistent save

* Saving rules...
* IPv4...
* IPv6...

Check the current indices. As you can see below, there is still nothing apart from the default ".kibana" index.

$ curl localhost:9200/_cat/indices

yellow open .kibana 1 1 1 0 3kb 3kb

WEB Server

ELK server port access

As you can see below, our ELK server is ready to accept incoming traffic from our WEB server on port 5044.

$ telnet 192.168.50.40 5044

Trying 192.168.50.40...
Connected to 192.168.50.40.
Escape character is '^]'.
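telnet is interactive (you leave it with the escape character shown above) and is not always installed. For a scripted check, a minimal Python sketch could simply try to open a TCP connection; `port_open` is a hypothetical helper name.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False
```

On the WEB server, `port_open("192.168.50.40", 5044)` should return True once Logstash is listening and the iptables rule is in place.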

Filebeat

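Creating source list. The repository is served over HTTPS, so on Ubuntu 14.04 apt needs the apt-transport-https package first.

$ sudo apt-get -y install apt-transport-https

$ echo "deb https://packages.elastic.co/beats/apt stable main" | sudo tee -a /etc/apt/sources.list.d/beats.list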

Importing public key.

$ wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

Updating repository database.

$ sudo apt-get -y update

Install.

$ sudo apt-get -y install filebeat

Configuring Filebeat to forward logs to Logstash on the ELK server.

$ sudo nano /etc/filebeat/filebeat.yml

filebeat:
  prospectors:
    -
      paths:
        - /var/log/apache2/access.log
      input_type: log

output:
  # elasticsearch:
  #   hosts: ["localhost:9200"]
  # Ship events to Logstash on the ELK server instead
  logstash:
    hosts: ["192.168.50.40:5044"]

As soon as we start Filebeat and it ships the first log lines, the "web-apache-access" index should be created in Elasticsearch on the ELK server.

$ sudo service filebeat start

ELK Server

Index

As you can see below, Filebeat related "web-apache-access" index has been created.

$ curl localhost:9200/_cat/indices

yellow open .kibana 1 1 103 0 94.7kb 94.7kb
yellow open web-apache-access 5 1 4 0 25.5kb 25.5kb

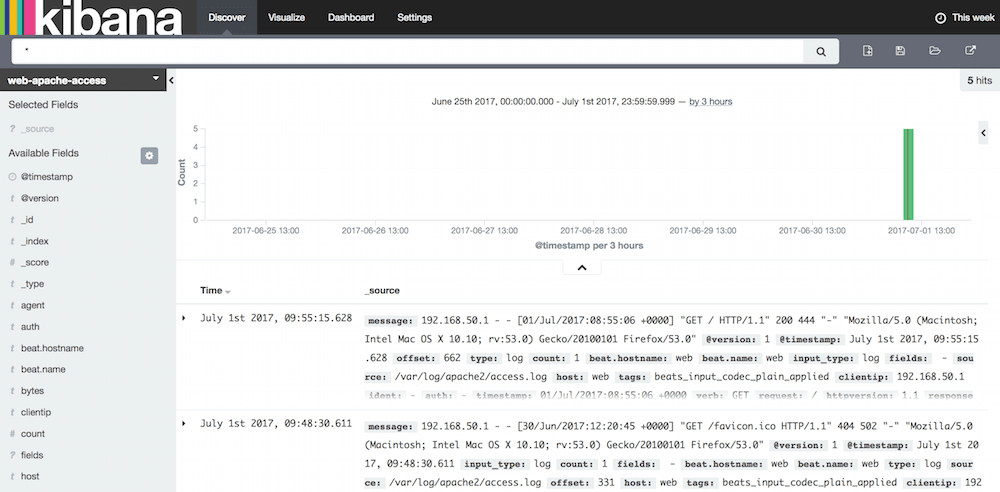

Kibana GUI setup

- Go to http://192.168.50.40:5601 to access the Kibana GUI.
- Type "web-apache-access" in the "Index name or pattern" field.
- Wait for the "Create" button to appear and click it.

Elasticsearch index content

$ curl -XGET localhost:9200/web-apache-access/_search?pretty=1

{
  "took": 4,
  "timed_out": false,
  "_shards": {
    "total": 5,
    "successful": 5,
    "failed": 0
  },
  "hits": {
    "total": 5,
    "max_score": 1.0,
    "hits": [
      {
        "_index": "web-apache-access",
        "_type": "apache_logs",
        "_id": "AVz66LnCUo6EnAM7FtHn",
        "_score": 1.0,
        "_source": {
          "message": "192.168.50.1 - - [30/Jun/2017:12:20:45 +0000] \"GET /favicon.ico HTTP/1.1\" 404 ...",
          "@version": "1",
          "@timestamp": "2017-07-01T08:48:30.610Z",
          "offset": 160,
          "input_type": "log",
          "count": 1,
          "fields": null,
          "source": "/var/log/apache2/access.log",
          "type": "log",
          "beat": {
            "hostname": "web",
            "name": "web"
          },
          "host": "web",
          "tags": [
            "beats_input_codec_plain_applied"
          ],
          "clientip": "192.168.50.1",
          "ident": "-",
          "auth": "-",
          "timestamp": "30/Jun/2017:12:20:45 +0000",
          "verb": "GET",
          "request": "/favicon.ico",
          "httpversion": "1.1",
          "response": "404",
          "bytes": "502",
          "referrer": "\"-\"",
          "agent": "\"Mozilla/5.0 (Macintosh; Intel Mac OS X 10.10; rv:53.0) Gecko/20100101 Firefox/53.0\""
        }
      },
      {
        "_index": "web-apache-access",
        "_type": "apache_logs",
        "_id": "AVz66LnCUo6EnAM7FtHp",
        "_score": 1.0,
        "_source": {
          "message": "192.168.50.1 - - [01/Jul/2017:08:26:07 +0000] \"GET / HTTP/1.1\" 200 444 \"-\" \"Mozilla/5.0 ...",
          "@version": "1",
          "@timestamp": "2017-07-01T08:48:30.611Z",
          "source": "/var/log/apache2/access.log",
          "offset": 502,
          "type": "log",
          "input_type": "log",
          "fields": null,
          "count": 1,
          "beat": {
            "hostname": "web",
            "name": "web"
          },
          "host": "web",
          "tags": [
            "beats_input_codec_plain_applied"
          ],
          "clientip": "192.168.50.1",
          "ident": "-",
          "auth": "-",
          "timestamp": "01/Jul/2017:08:26:07 +0000",
          "verb": "GET",
          "request": "/",
          "httpversion": "1.1",
          "response": "200",
          "bytes": "444",
          "referrer": "\"-\"",
          "agent": "\"Mozilla/5.0 (Macintosh; Intel Mac OS X 10.10; rv:53.0) Gecko/20100101 Firefox/53.0\""
        }
      }
    ]
  }
}
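A quick way to get a feel for the data is to tally the response codes in a `_search` result. Keep in mind that `_search` returns only 10 hits by default, so for real statistics an Elasticsearch terms aggregation or a Kibana visualisation is the better tool. This minimal Python sketch works on the JSON shape shown above; `count_responses` is a hypothetical name.

```python
import json
from collections import Counter


def count_responses(search_body: str) -> Counter:
    """Count HTTP status codes across the hits of a `_search` response."""
    result = json.loads(search_body)
    return Counter(
        # Events that failed grok parsing have no "response" field.
        hit["_source"].get("response", "unparsed")
        for hit in result["hits"]["hits"]
    )
```

For the two documents above, the counter would hold one "404" and one "200".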

Elasticsearch index mapping

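The documents above carry the "apache_logs" type, which is what Logstash assigns when document_type => "apache_logs" is enabled in its output. With that line commented out, as in the configuration above, Logstash 2.x uses the event's "type" field instead ("log" here), so querying the mapping of the whole index works either way.

$ curl -XGET 'localhost:9200/web-apache-access/_mapping?pretty'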

{
  "web-apache-access": {
    "mappings": {
      "apache_logs": {
        "properties": {
          "@timestamp": {
            "type": "date",
            "format": "strict_date_optional_time||epoch_millis"
          },
          "@version": {
            "type": "string"
          },
          "agent": {
            "type": "string"
          },
          "auth": {
            "type": "string"
          },
          "beat": {
            "properties": {
              "hostname": {
                "type": "string"
              },
              "name": {
                "type": "string"
              }
            }
          },
          "bytes": {
            "type": "string"
          },
          "clientip": {
            "type": "string"
          },
          "count": {
            "type": "long"
          },
          "host": {
            "type": "string"
          },
          "httpversion": {
            "type": "string"
          },
          "ident": {
            "type": "string"
          },
          "input_type": {
            "type": "string"
          },
          "message": {
            "type": "string"
          },
          "offset": {
            "type": "long"
          },
          "referrer": {
            "type": "string"
          },
          "request": {
            "type": "string"
          },
          "response": {
            "type": "string"
          },
          "source": {
            "type": "string"
          },
          "tags": {
            "type": "string"
          },
          "timestamp": {
            "type": "string"
          },
          "type": {
            "type": "string"
          },
          "verb": {
            "type": "string"
          }
        }
      }
    }
  }
}
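In this mapping every grok field, including bytes and response, is a string, so Kibana can count their values but cannot sum or average bytes. Logstash's mutate filter has a convert option for changing field types, but since an existing field mapping cannot be changed, this would only take effect in a new index. To spot such fields quickly, a small Python sketch can flatten a mapping response into path/type pairs; `field_types` is a hypothetical helper.

```python
import json
from typing import Dict


def field_types(mapping_body: str) -> Dict[str, str]:
    """Flatten a GET _mapping response into {"field.path": "type"}."""
    types: Dict[str, str] = {}

    def walk(props: dict, prefix: str) -> None:
        for name, spec in props.items():
            path = prefix + name
            if "properties" in spec:  # object field such as "beat"
                walk(spec["properties"], path + ".")
            else:
                types[path] = spec.get("type", "object")

    for index in json.loads(mapping_body).values():
        for doc_type in index["mappings"].values():
            walk(doc_type.get("properties", {}), "")
    return types
```

Filtering the result for values equal to "string" lists every field that Kibana treats as text, including bytes and response.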