Setting up an automated CI/CD pipeline with GitHub Actions, ArgoCD, Helm and Kubernetes

08/02/2022 - ARGOCD, DOCKER, GIT, GO, HELM, KUBERNETES

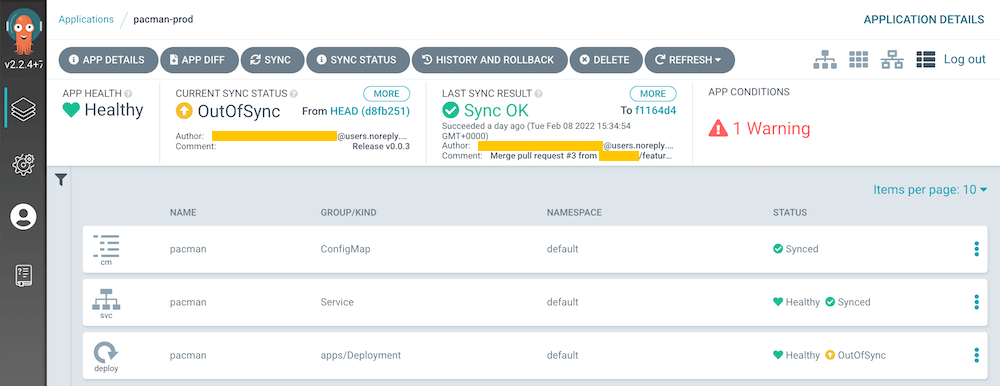

In this example we are going to implement a full CI/CD pipeline for one of our applications. For CI (Continuous Integration) we will use GitHub Actions, and for CD (Continuous Deployment/Delivery) we will use ArgoCD. To make some steps dynamic and less repetitive, we will use Helm Charts rather than plain Kubernetes manifests (nothing stops you from dropping Helm and sticking with plain K8S manifests though). Finally, the application will be deployed to a few different Kubernetes clusters and namespaces. Although this is for a polyrepo application, you can easily adapt it to a monorepo as well. In fact, it would suit a monorepo very nicely once you move the Helm Charts to the config repository and apply some minor tweaks to the other configurations.

We are going to create two GitHub repositories. One is for the application, where the application code and the Helm Charts (I will explain why!) live. The other is for ArgoCD's declarative configuration files, because we don't want to use the UI to manually create many resources every single time a new application pops up (we will use the argocd command instead).

Before I begin, I should make it clear that everyone has different requirements and expectations, so you are free to go your own way. Do not feel like you have to do it the way I do in this example. You can make all stages fully automatic (GitFlow branching would help a lot with this), fully manual or, like I do, partially automated. You can also create a Kubernetes cluster per environment if you are willing to spend more money :) There are pros and cons to all options.

Kubernetes setup

You can add more clusters or namespaces if you wish.

CLUSTER   NAMESPACE   DESCRIPTION       DEPLOYMENT STRATEGY           IMAGE TAG
nonprod   dev         development env   fully auto CI/CD              latest
nonprod   sbox        sandbox env       fully auto CI but manual CD   semantic ver
prod      default     production env    fully auto CI but manual CD   semantic ver

CI/CD strategy

Here I explain how the whole setup behaves for each ArgoCD application entry. dev always auto deploys after HEAD/master changes in the GitHub repository. sbox auto deploys only when a new release is created and its image tag is added to the Helm Chart file. prod never auto deploys, even if a new release is created or the image tag is added to the Helm Chart file. ArgoCD checks for changes every three minutes, which is its default polling interval. By the way, when I say "flow" below, I am talking about the "application" entry in the ArgoCD UI, which represents a specific application and its environment.

dev

ArgoCD "auto-sync" is enabled for auto deployment. ArgoCD watches the changes made to HEAD/master in the GitHub repository. If a change is detected and the digest of the latest image tag in DockerHub is different from what ArgoCD knows, auto deploy starts. The whole CI/CD is automated, so engineers don't need to do anything manually for the deployment.

Kubernetes "force rollout" is enabled because the image tag we are using is always latest. Since the tag itself never changes, the rendered Deployment would otherwise look identical after every build and Kubernetes would have no reason to replace the running Pods and pull the new image. You don't want to create a new unique image tag every single time a new feature is introduced anyway.
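To illustrate why a changing annotation forces a rollout, here is a minimal Go sketch. It is only a model: Kubernetes computes its own Pod template hash internally, and the podTemplate type and the templateHash and needsRollout functions are hypothetical names made up for this example. The idea it shows is that a Deployment replaces its Pods only when the Pod template differs, and with a fixed latest tag the template stays the same unless something like the roller annotation changes.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"sort"
)

// podTemplate is a hypothetical, heavily simplified stand-in for the
// Pod template of a Deployment.
type podTemplate struct {
	Image       string
	Annotations map[string]string
}

// templateHash returns a deterministic fingerprint of the template.
// This is NOT the algorithm Kubernetes uses, just an illustration.
func templateHash(t podTemplate) string {
	keys := make([]string, 0, len(t.Annotations))
	for k := range t.Annotations {
		keys = append(keys, k)
	}
	sort.Strings(keys)

	h := sha256.New()
	h.Write([]byte(t.Image))
	for _, k := range keys {
		h.Write([]byte("\n" + k + "=" + t.Annotations[k]))
	}
	return hex.EncodeToString(h.Sum(nil))[:12]
}

// needsRollout reports whether the desired template differs from the
// current one, which is what makes a Deployment replace its Pods.
func needsRollout(current, desired podTemplate) bool {
	return templateHash(current) != templateHash(desired)
}

func main() {
	current := podTemplate{
		Image:       "you/pacman:latest",
		Annotations: map[string]string{"roller": "a1B2c"},
	}
	unchanged := podTemplate{
		Image:       "you/pacman:latest",
		Annotations: map[string]string{"roller": "a1B2c"},
	}
	rolled := podTemplate{
		Image:       "you/pacman:latest",
		Annotations: map[string]string{"roller": "x9Y8z"},
	}

	fmt.Println(needsRollout(current, unchanged)) // false
	fmt.Println(needsRollout(current, rolled))    // true
}
```

The image reference is identical in all three templates; only the annotation value decides whether a rollout happens.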

Creating a new release in GitHub doesn't affect this flow.

sbox

ArgoCD "auto-sync" is enabled for auto deployment. ArgoCD watches the changes made to HEAD/master in the GitHub repository. If a new release is created and the .infra/helm/Chart.yaml:appVersion is different from what ArgoCD knows, auto deploy starts. This CD flow is partially automated, so engineers need to create a pull request that adds the new release tag to the file mentioned. Once that is merged, auto deploy kicks in.

Kubernetes "force rollout" is disabled because the image tag is a semantic version, so any change to it triggers a new rollout and image pull anyway.

Everything done to make this flow work also triggers the dev flow, because HEAD/master in the GitHub repository changes, as does the digest of the latest image tag in DockerHub. The prod flow will be put into the "out of sync" state because the semantic image tag ArgoCD knows is now outdated.

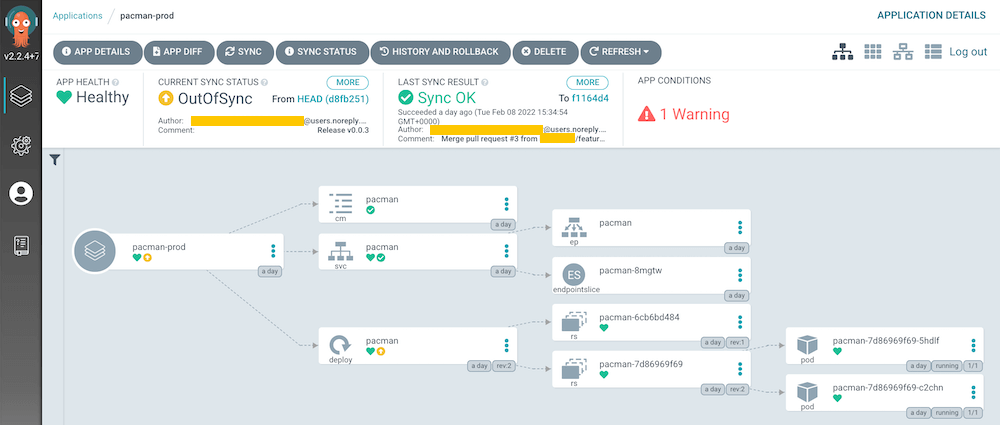

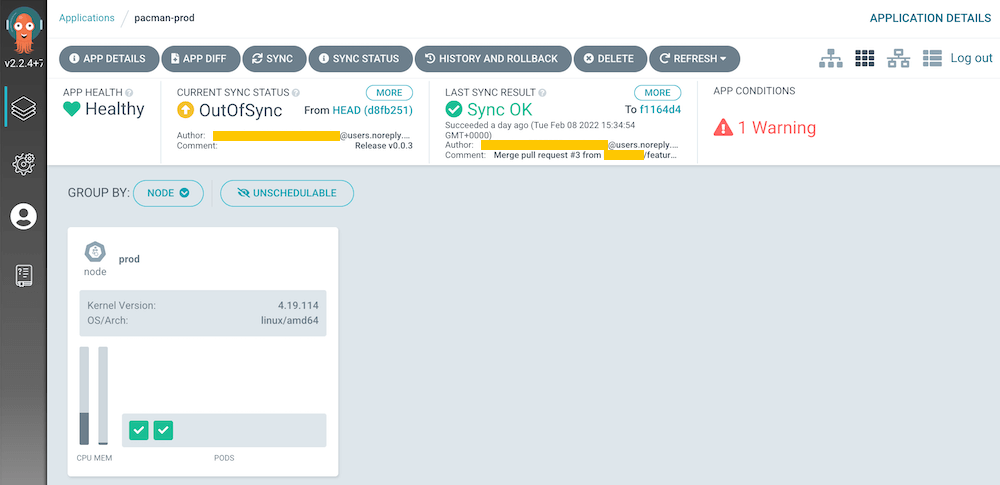

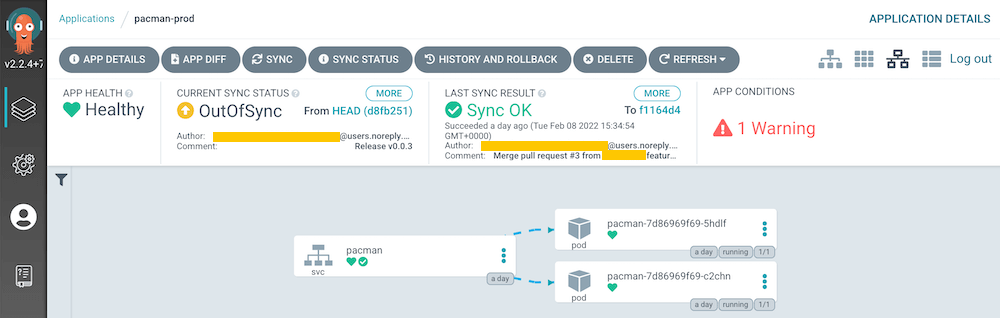

prod

ArgoCD "auto-sync" is disabled, so there is no auto deployment. However, if a new release is created and the .infra/helm/Chart.yaml:appVersion is different from what ArgoCD knows, this flow will be put into the "out of sync" state, at which point an engineer can click the "SYNC" icon to trigger the deployment. As you can see, this CD flow is fully manual: engineers need to create a pull request that adds the new release tag to the file mentioned and then click the "SYNC" icon.

Kubernetes "force rollout" is disabled because the image tag is a semantic version, so any change to it triggers a new rollout and image pull anyway.

This flow won't affect the dev flow because the sbox flow has already taken care of it before this stage.
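The behaviour of the three flows can be summarised as a small decision function. The Go sketch below is only a mental model of what is described in this section, not something ArgoCD exposes; the flow type, its fields and the decide function are hypothetical names.

```go
package main

import "fmt"

// flow is a hypothetical model of one ArgoCD application entry.
type flow struct {
	Name       string
	AutoSync   bool // ArgoCD "auto-sync" enabled?
	TracksHead bool // true: reacts to every HEAD/master change (dev); false: reacts to appVersion changes
}

type action string

const (
	noop      action = "nothing happens"
	deploy    action = "auto deploy"
	outOfSync action = "out of sync, waits for manual SYNC"
)

// decide returns what a flow does after a change in the application repository.
func decide(f flow, headChanged, appVersionChanged bool) action {
	changed := appVersionChanged
	if f.TracksHead {
		changed = headChanged
	}
	switch {
	case !changed:
		return noop
	case f.AutoSync:
		return deploy
	default:
		return outOfSync
	}
}

func main() {
	flows := []flow{
		{Name: "dev", AutoSync: true, TracksHead: true},
		{Name: "sbox", AutoSync: true, TracksHead: false},
		{Name: "prod", AutoSync: false, TracksHead: false},
	}

	// Scenario: a pull request that bumps appVersion in Chart.yaml is merged,
	// so both HEAD/master and appVersion change.
	for _, f := range flows {
		fmt.Printf("%s: %s\n", f.Name, decide(f, true, true))
	}
	// dev: auto deploy
	// sbox: auto deploy
	// prod: out of sync, waits for manual SYNC
}
```

Calling decide with headChanged set to true and appVersionChanged set to false models a regular merge: only dev deploys and the other two flows stay untouched.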

Rollback

If you roll back a flow for any environment, it will stay as-is even if someone introduces a new change anywhere. The auto-sync option will not revert your rollback even if the HEAD/master commit hash changes, a new release is created or the Helm Chart file is updated. You will have to manually SYNC the flow to unlock it first, which is a good thing because you don't want your rollbacks to be prematurely reverted.

Keeping Helm Charts in application repository

ArgoCD suggests keeping all manifests and configuration files in a separate repository (e.g. config) for many good reasons. However, this has one problem that defeats the whole purpose of a fully automated deployment pipeline. For instance, if you want to provide a fully automated CD like I do for the dev flow, you will have to manually update something in the config repository so that ArgoCD picks it up and triggers the deployment. This is because your ArgoCD applications are set to watch the config repository, not the application repository anymore. This is not an engineer-friendly approach because, as I said, every time you introduce something to your application repository, you will also have to do something in the config repository. Too much hassle! Having said all that, some people suggest a solution to avoid this manual operation: pushing a commit to the config repository from within the CI steps. I find this a bit hacky. What if the CI step breaks because of a conflict? Also, I don't like the idea of pulling a different repository and pushing a commit to it from within a CI pipeline that belongs to another repository. I just don't like the sound of this solution. If you still want it, here is an example of mine.

Adopting ArgoCD's suggestion can be very useful if your application repository is set up as a monorepo, because you might have hundreds of services in there. In such a case, you will often want to deploy specific services, not all of them, after every single change in the application repository. Deploying everything would be crazy! So in short, see what you have and do what is best.

GitHub Actions's responsibility

There are three actions, but only two of them, "merge" and "release", directly affect ArgoCD. You can read the detailed comments in the actual files below.

The "merge" action pushes a new docker image using the "latest" tag. This is for the dev CD flow. The "release" action pushes a new docker image tagged with the semantic version of the release you have just created. This is for the sbox and prod CD flows.
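As a rough illustration of the tagging rule, the Go sketch below maps a workflow to the image reference it would push. The imageRef function and the semverTag pattern are hypothetical. The workflows in this post do not validate the release tag, so the vMAJOR.MINOR.PATCH check is an assumption added here to show what "semantic version" means in practice.

```go
package main

import (
	"fmt"
	"regexp"
)

// semverTag matches tags such as v0.0.3. The exact tag format is an
// assumption based on the appVersion used in this post.
var semverTag = regexp.MustCompile(`^v\d+\.\d+\.\d+$`)

// imageRef returns the image reference a workflow would push.
// "merge" always yields the latest tag, "release" yields the release tag.
func imageRef(repo, workflow, releaseTag string) (string, error) {
	switch workflow {
	case "merge":
		return repo + ":latest", nil
	case "release":
		if !semverTag.MatchString(releaseTag) {
			return "", fmt.Errorf("release tag %q is not a semantic version", releaseTag)
		}
		return repo + ":" + releaseTag, nil
	default:
		return "", fmt.Errorf("workflow %q does not push an image", workflow)
	}
}

func main() {
	for _, c := range []struct{ workflow, tag string }{
		{"merge", ""},
		{"release", "v0.0.3"},
		{"pull_request", ""},
	} {
		ref, err := imageRef("you/pacman", c.workflow, c.tag)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(ref)
	}
}
```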

Application repository

├── .github
│   └── workflows
│       ├── merge.yaml
│       ├── pull_request.yaml
│       └── release.yaml
├── .infra
│   ├── docker
│   │   └── Dockerfile
│   └── helm
│       ├── Chart.yaml
│       ├── dev.yaml
│       ├── prod.yaml
│       ├── sbox.yaml
│       └── templates
│           ├── configmap.yaml
│           ├── deployment.yaml
│           └── service.yaml
├── .dockerignore
├── main.go
└── main_test.go

Files

merge.yaml

# Trigger the workflow only when:
# - an existing pull request with any name/type is merged to the master or develop branch
# - a commit is directly pushed to the master or develop branch

name: Merge

on:
  push:
    branches:
      - master
      - develop

jobs:

  setup:
    runs-on: ubuntu-latest
    outputs:
      ver: ${{ steps.vars.outputs.ver }}
    steps:
      - name: Use repository
        uses: actions/checkout@v2
      - name: Build variables
        id: vars
        run: |
          echo "::set-output name=ver::$(git rev-parse --short "$GITHUB_SHA")"
      - name: Upload repository
        uses: actions/upload-artifact@v2
        with:
          name: repository
          path: |
            ${{ github.workspace }}/.infra/docker
            ${{ github.workspace }}/.dockerignore
            ${{ github.workspace }}/main.go
            ${{ github.workspace }}/main_test.go
            ${{ github.workspace }}/go.mod
            ${{ github.workspace }}/go.sum

  test:
    needs: setup
    runs-on: ubuntu-latest
    steps:
      - name: Use Golang 1.17
        uses: actions/setup-go@v2
        with:
          go-version: 1.17
      - name: Download repository
        uses: actions/download-artifact@v2
        with:
          name: repository
      - name: Run tests
        run: go test -v -race -timeout=180s -count=1 -cover ./...

  docker:
    needs: [setup, test]
    runs-on: ubuntu-latest
    steps:
      - name: Download repository
        uses: actions/download-artifact@v2
        with:
          name: repository
      - name: Login to DockerHub
        uses: docker/login-action@v1
        with:
          username: ${{ secrets.DOCKERHUB_USER }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}
      - name: Build and push image
        uses: docker/build-push-action@v2
        with:
          push: true
          file: .infra/docker/Dockerfile
          tags: ${{ github.repository }}:latest
          build-args: VER=${{ needs.setup.outputs.ver }}

pull_request.yaml

# Trigger the workflow only when:
# - a new pull request with any name/type is opened against the master, develop, hotfix/* or release/* branch
# - a commit is directly pushed to the pull request

name: Pull Request

on:
  pull_request:
    branches:
      - master
      - develop
      - hotfix/*
      - release/*

jobs:

  setup:
    runs-on: ubuntu-latest
    steps:
      - name: Use repository
        uses: actions/checkout@v2
      - name: Upload repository
        uses: actions/upload-artifact@v2
        with:
          name: repository
          path: |
            ${{ github.workspace }}/main.go
            ${{ github.workspace }}/main_test.go
            ${{ github.workspace }}/go.mod
            ${{ github.workspace }}/go.sum

  test:
    needs: setup
    runs-on: ubuntu-latest
    steps:
      - name: Use Golang 1.17
        uses: actions/setup-go@v2
        with:
          go-version: 1.17
      - name: Download repository
        uses: actions/download-artifact@v2
        with:
          name: repository
      - name: Run tests
        run: go test -v -race -timeout=180s -count=1 -cover ./...

release.yaml

# Trigger the workflow only when:
# - a new release is released which excludes pre-release and draft

name: Release

on:
  release:
    types:
      - released

jobs:

  setup:
    runs-on: ubuntu-latest
    steps:
      - name: Use repository
        uses: actions/checkout@v2
      - name: Upload repository
        uses: actions/upload-artifact@v2
        with:
          name: repository
          # The Dockerfile lives under .infra/docker, as in merge.yaml
          path: |
            ${{ github.workspace }}/.infra/docker
            ${{ github.workspace }}/.dockerignore
            ${{ github.workspace }}/main.go
            ${{ github.workspace }}/main_test.go
            ${{ github.workspace }}/go.mod
            ${{ github.workspace }}/go.sum

  test:
    needs: setup
    runs-on: ubuntu-latest
    steps:
      - name: Use Golang 1.17
        uses: actions/setup-go@v2
        with:
          go-version: 1.17
      - name: Download repository
        uses: actions/download-artifact@v2
        with:
          name: repository
      - name: Run tests
        run: go test -v -race -timeout=180s -count=1 -cover ./...

  docker:
    needs: [setup, test]
    runs-on: ubuntu-latest
    steps:
      - name: Download repository
        uses: actions/download-artifact@v2
        with:
          name: repository
      - name: Login to DockerHub
        uses: docker/login-action@v1
        with:
          username: ${{ secrets.DOCKERHUB_USER }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}
      - name: Build and push image
        uses: docker/build-push-action@v2
        with:
          push: true
          file: .infra/docker/Dockerfile
          tags: ${{ github.repository }}:${{ github.event.release.tag_name }}
          build-args: VER=${{ github.event.release.tag_name }}

Dockerfile

FROM golang:1.17.5-alpine3.15 AS build
WORKDIR /source
COPY . .
ARG VER
RUN CGO_ENABLED=0 go build -ldflags "-s -w -X main.ver=${VER}" -o pacman main.go

FROM alpine:3.15
COPY --from=build /source/pacman /pacman
EXPOSE 8080
ENTRYPOINT ["./pacman"]
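The -X main.ver=${VER} linker flag in the Dockerfile sets the value of the package-level ver string variable in main.go at build time. The Go sketch below shows the mechanism in isolation. The versionOr helper and its "unknown" fallback are assumptions added for illustration; the application in this post uses ver as-is.

```go
package main

import "fmt"

// ver is empty unless it is set at link time, for example:
//
//	go build -ldflags "-X main.ver=v0.0.3" -o pacman main.go
//
// -X only works on package-level string variables.
var ver string

// versionOr returns v, or fallback when the binary was built without -X.
// This helper is hypothetical and not part of the original application.
func versionOr(v, fallback string) string {
	if v == "" {
		return fallback
	}
	return v
}

func main() {
	fmt.Println("pacman", versionOr(ver, "unknown"))
}
```

Running it with plain go run prints the fallback, while a binary built with the -ldflags shown in the comment prints the injected version.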

Chart.yaml

apiVersion: v2
name: pacman
type: application
icon: https://
description: This is an HTTP API
version: 0.0.0
appVersion: v0.0.3

dev.yaml

namespace: dev

env:
  HTTP_ADDR: :8080

image:
  name: you/pacman
  tag: latest
  pull: Always

deployment:
  force: true
  replicas: 1
  container:
    name: go
    port: 8080

service:
  type: ClusterIP
  port: 8080

prod.yaml

namespace: default

env:
  HTTP_ADDR: :8080

image:
  name: you/pacman
  tag: ""
  pull: IfNotPresent

deployment:
  force: false
  replicas: 2
  container:
    name: go
    port: 8080

service:
  type: ClusterIP
  port: 8080

sbox.yaml

namespace: sbox

env:
  HTTP_ADDR: :8080

image:
  name: you/pacman
  tag: ""
  pull: IfNotPresent

deployment:
  force: false
  replicas: 2
  container:
    name: go
    port: 8080

service:
  type: ClusterIP
  port: 8080

configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Chart.Name }}
  namespace: {{ .Values.namespace }}
data:
  HTTP_ADDR: {{ .Values.env.HTTP_ADDR | quote }}

deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Chart.Name }}
  namespace: {{ .Values.namespace }}
  labels:
    app: {{ .Chart.Name }}
spec:
  replicas: {{ .Values.deployment.replicas }}
  selector:
    matchLabels:
      app: {{ .Chart.Name }}
  template:
    metadata:
      labels:
        app: {{ .Chart.Name }}
      {{- if .Values.deployment.force }}
      annotations:
        # Quoted because annotation values must be strings and
        # randAlphaNum can return digits only.
        roller: {{ randAlphaNum 5 | quote }}
      {{- end }}
    spec:
      containers:
        - name: {{ .Values.deployment.container.name }}
          image: "{{ .Values.image.name }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
          imagePullPolicy: {{ .Values.image.pull }}
          ports:
            - containerPort: {{ .Values.deployment.container.port }}
          envFrom:
            - configMapRef:
                name: {{ .Chart.Name }}
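The image line in deployment.yaml is what ties the sbox and prod flows to Chart.yaml: their values files set tag to an empty string, so the default function falls back to appVersion. The Go sketch below reproduces that behaviour with the standard text/template package. It is not Helm itself; the default function here is a hand-written stand-in for the one Helm provides, and renderImage and renderData are hypothetical names.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// imageTmpl mirrors the image expression used in deployment.yaml.
const imageTmpl = `{{ .Values.image.name }}:{{ .Values.image.tag | default .Chart.AppVersion }}`

type chart struct{ AppVersion string }

type renderData struct {
	Chart  chart
	Values map[string]map[string]string
}

// renderImage renders the image reference for a given values tag and appVersion.
func renderImage(tag, appVersion string) (string, error) {
	funcs := template.FuncMap{
		// Stand-in for Helm's default: the piped value arrives as the last argument.
		"default": func(def, given string) string {
			if given == "" {
				return def
			}
			return given
		},
	}
	t, err := template.New("image").Funcs(funcs).Parse(imageTmpl)
	if err != nil {
		return "", err
	}
	data := renderData{
		Chart: chart{AppVersion: appVersion},
		Values: map[string]map[string]string{
			"image": {"name": "you/pacman", "tag": tag},
		},
	}
	var sb strings.Builder
	if err := t.Execute(&sb, data); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	dev, _ := renderImage("latest", "v0.0.3") // dev.yaml sets tag: latest
	prod, _ := renderImage("", "v0.0.3")      // sbox.yaml and prod.yaml set tag: ""
	fmt.Println(dev)  // you/pacman:latest
	fmt.Println(prod) // you/pacman:v0.0.3
}
```

This is why bumping appVersion in Chart.yaml is enough to change the image for sbox and prod, while dev keeps pointing at latest.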

service.yaml

apiVersion: v1
kind: Service
metadata:
  name: {{ .Chart.Name }}
  namespace: {{ .Values.namespace }}
spec:
  type: {{ .Values.service.type }}
  selector:
    app: {{ .Chart.Name }}
  ports:
    - port: {{ .Values.service.port }}
      targetPort: {{ .Values.deployment.container.port }}

.dockerignore

.dockerignore
.gitignore
*.md
.infra/
.git/

main.go

package main

import (
	"log"
	"net/http"
	"os"
)

var ver string

func main() {
	rtr := http.DefaultServeMux
	rtr.HandleFunc("/", home{}.handle)

	addr := os.Getenv("HTTP_ADDR")

	log.Printf("%s: info: http listen and serve pacman: %s", ver, addr)
	if err := http.ListenAndServe(addr, rtr); err != nil && err != http.ErrServerClosed {
		log.Printf("%s: error: http listen and serve pacman: %s", ver, err)
	}
}

type home struct{}

func (h home) handle(w http.ResponseWriter, r *http.Request) {
	log.Printf("%s: info: X-Request-ID: %s\n", ver, r.Header.Get("X-Request-ID"))
	_, _ = w.Write([]byte("pacman" + ver))
}

main_test.go

package main

import (
	"net/http"
	"net/http/httptest"
	"testing"
)

func Test_home_handle(t *testing.T) {
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	res := httptest.NewRecorder()

	home{}.handle(res, req)

	if res.Code != http.StatusOK {
		t.Error("expected 200 but got", res.Code)
	}
}

Config repository

This is just to keep ArgoCD's declarative configurations, for now!

└── infra
    └── argocd
        └── pacman
            ├── dev.yaml
            ├── prod.yaml
            ├── project.yaml
            └── sbox.yaml

Files

dev.yaml

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: pacman-dev
  namespace: default
spec:
  project: pacman
  source:
    repoURL: https://github.com/you/pacman
    path: .infra/helm
    targetRevision: HEAD
    helm:
      valueFiles:
        - dev.yaml
  destination:
    namespace: dev
    name: nonprod
  syncPolicy:
    syncOptions:
      - ApplyOutOfSyncOnly=true
      - CreateNamespace=true
    automated:
      prune: true
      selfHeal: true

prod.yaml

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: pacman-prod
  namespace: default
spec:
  project: pacman
  source:
    repoURL: https://github.com/you/pacman
    path: .infra/helm
    targetRevision: HEAD
    helm:
      valueFiles:
        - prod.yaml
  destination:
    namespace: default
    name: prod
  syncPolicy:
    syncOptions:
      - ApplyOutOfSyncOnly=true
      - CreateNamespace=true

sbox.yaml

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: pacman-sbox
  namespace: default
spec:
  project: pacman
  source:
    repoURL: https://github.com/you/pacman
    path: .infra/helm
    targetRevision: HEAD
    helm:
      valueFiles:
        - sbox.yaml
  destination:
    namespace: sbox
    name: nonprod
  syncPolicy:
    syncOptions:
      - ApplyOutOfSyncOnly=true
      - CreateNamespace=true
    automated:
      prune: true
      selfHeal: true

project.yaml

apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: pacman
  namespace: default
spec:
  destinations:
    - name: '*'
      namespace: '*'
      server: '*'
  clusterResourceWhitelist:
    - group: '*'
      kind: '*'
  orphanedResources:
    warn: true
  sourceRepos:
    - https://github.com/you/pacman
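The three Application files above differ only in the environment name, the destination and whether the automated sync policy is present. If you end up with many applications, you could generate them instead of copying them. The Go sketch below is one possible way to do that with text/template; the appEnv type and the renderApp function are hypothetical and not part of ArgoCD or its CLI.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// appEnv holds the only values that differ between the three manifests.
type appEnv struct {
	Name      string // dev, sbox or prod
	Cluster   string // destination cluster name
	Namespace string // destination namespace
	AutoSync  bool   // adds the "automated" sync policy when true
}

const appTmpl = `apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: pacman-{{ .Name }}
  namespace: default
spec:
  project: pacman
  source:
    repoURL: https://github.com/you/pacman
    path: .infra/helm
    targetRevision: HEAD
    helm:
      valueFiles:
        - {{ .Name }}.yaml
  destination:
    namespace: {{ .Namespace }}
    name: {{ .Cluster }}
  syncPolicy:
    syncOptions:
      - ApplyOutOfSyncOnly=true
      - CreateNamespace=true
{{- if .AutoSync }}
    automated:
      prune: true
      selfHeal: true
{{- end }}
`

// renderApp renders one Application manifest for the given environment.
func renderApp(e appEnv) (string, error) {
	t, err := template.New("app").Parse(appTmpl)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := t.Execute(&sb, e); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	envs := []appEnv{
		{Name: "dev", Cluster: "nonprod", Namespace: "dev", AutoSync: true},
		{Name: "sbox", Cluster: "nonprod", Namespace: "sbox", AutoSync: true},
		{Name: "prod", Cluster: "prod", Namespace: "default", AutoSync: false},
	}
	for _, e := range envs {
		out, err := renderApp(e)
		if err != nil {
			fmt.Println("error:", err)
			return
		}
		fmt.Print("---\n", out)
	}
}
```

For just three environments, the hand-written files are perfectly fine; the sketch mainly makes the differences between them explicit.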

Prepare GitHub

Add the DOCKERHUB_USER and DOCKERHUB_TOKEN secrets to the application repository. You need to create a token in DockerHub first to use as DOCKERHUB_TOKEN, e.g. 86b2f4b8-816d-4d96-9666-ac0a8066e737. Also create a GitHub token called ARGOCD and tick the repo scope, e.g. ghp_816dac0a8066e737966686b2f4b84d96.

Prepare Kubernetes

Create clusters

$ minikube start -p argocd --vm-driver=virtualbox --memory=2000

Starting control plane node argocd in cluster argocd

$ minikube start -p nonprod --vm-driver=virtualbox

Starting control plane node nonprod in cluster nonprod

$ minikube start -p prod --vm-driver=virtualbox

Starting control plane node prod in cluster prod

Verify

$ kubectl config get-contexts
CURRENT   NAME      CLUSTER   AUTHINFO   NAMESPACE
*         argocd    argocd    argocd
          nonprod   nonprod   nonprod
          prod      prod      prod

$ kubectl config view
apiVersion: v1
clusters:
- cluster:
    certificate-authority: /Users/you/.minikube/ca.crt
    server: https://192.168.99.105:8443
  name: argocd
- cluster:
    certificate-authority: /Users/you/.minikube/ca.crt
    server: https://192.168.99.106:8443
  name: nonprod
- cluster:
    certificate-authority: /Users/you/.minikube/ca.crt
    server: https://192.168.99.107:8443
  name: prod
contexts:
- context:
    cluster: argocd
    user: argocd
  name: argocd
- context:
    cluster: nonprod
    user: nonprod
  name: nonprod
- context:
    cluster: prod
    user: prod
  name: prod
current-context: argocd
kind: Config
preferences: {}
users:
- name: argocd
  user:
    client-certificate: /Users/you/.minikube/profiles/argocd/client.crt
    client-key: /Users/you/.minikube/profiles/argocd/client.key
- name: nonprod
  user:
    client-certificate: /Users/you/.minikube/profiles/nonprod/client.crt
    client-key: /Users/you/.minikube/profiles/nonprod/client.key
- name: prod
  user:
    client-certificate: /Users/you/.minikube/profiles/prod/client.crt
    client-key: /Users/you/.minikube/profiles/prod/client.key

Helm installation

Head to the Helm website for the Helm CLI installation. We use it for creating, linting and debugging our charts. You can run its commands against the charts I added to this example. As I said before, Helm is not required. You could just use Kubernetes manifests with some minor changes. However, Helm makes many things more pleasant to work with.

ArgoCD installation

Most examples install ArgoCD on the application clusters, but I will have a dedicated cluster for ArgoCD as I like to keep things separate. ArgoCD will then deploy applications to the other clusters. Check here for more installation options.

$ kubectl config current-context

argocd

$ kubectl apply -f https://raw.githubusercontent.com/argoproj/argo-cd/v2.2.4/manifests/install.yaml

If you wish to uninstall it, you can use the command below with the same manifest version you installed.

$ kubectl delete -f https://raw.githubusercontent.com/argoproj/argo-cd/v2.2.4/manifests/install.yaml

$ kubectl get all

NAME                                      READY   STATUS    RESTARTS   AGE
pod/argocd-application-controller-0       1/1     Running   7          3d16h
pod/argocd-dex-server-97b8cff96-xtz4n     1/1     Running   0          3d16h
pod/argocd-redis-584f4df7d7-qpl6m         1/1     Running   0          3d16h
pod/argocd-repo-server-7b46f67c87-9gjfz   1/1     Running   9          3d16h
pod/argocd-server-864d74fcbb-vcwnf        1/1     Running   3          3d16h

NAME                            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
service/argocd-dex-server       ClusterIP   10.101.97.152   <none>        5556/TCP,5557/TCP,5558/TCP   3d16h
service/argocd-metrics          ClusterIP   10.108.140.33   <none>        8082/TCP                     3d16h
service/argocd-redis            ClusterIP   10.108.70.139   <none>        6379/TCP                     3d16h
service/argocd-repo-server      ClusterIP   10.110.39.105   <none>        8081/TCP,8084/TCP            3d16h
service/argocd-server           ClusterIP   10.99.239.9     <none>        80/TCP,443/TCP               3d16h
service/argocd-server-metrics   ClusterIP   10.111.75.208   <none>        8083/TCP                     3d16h
service/kubernetes              ClusterIP   10.96.0.1       <none>        443/TCP                      3d16h

NAME                                 READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/argocd-dex-server    1/1     1            1           3d16h
deployment.apps/argocd-redis         1/1     1            1           3d16h
deployment.apps/argocd-repo-server   1/1     1            1           3d16h
deployment.apps/argocd-server        1/1     1            1           3d16h

NAME                                            DESIRED   CURRENT   READY   AGE
replicaset.apps/argocd-dex-server-97b8cff96     1         1         1       3d16h
replicaset.apps/argocd-redis-584f4df7d7         1         1         1       3d16h
replicaset.apps/argocd-repo-server-7b46f67c87   1         1         1       3d16h
replicaset.apps/argocd-server-864d74fcbb        1         1         1       3d16h

NAME                                             READY   AGE
statefulset.apps/argocd-application-controller   1/1     3d16h

Let's expose it so we can access it using a browser. By the way, you can expose the ArgoCD server with a LoadBalancer service and avoid port forwarding.

$ kubectl port-forward svc/argocd-server 8443:443

Extract the password for login using the command below.

$ kubectl get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d; echo

// Assume you got gYzYfJ5kxzJsdZGK
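The base64 -d part of the command is needed because Kubernetes stores secret data base64-encoded. If you ever read the secret from code rather than from the shell, the same decoding step applies. The Go sketch below shows only that step; decodeSecret is a hypothetical helper, and the encoded value is produced locally from the example password above rather than fetched from a cluster.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// decodeSecret decodes a base64-encoded value as found under .data of a
// Kubernetes Secret.
func decodeSecret(encoded string) (string, error) {
	raw, err := base64.StdEncoding.DecodeString(encoded)
	if err != nil {
		return "", fmt.Errorf("decode secret: %w", err)
	}
	return string(raw), nil
}

func main() {
	// Simulates what .data.password would contain for the example password.
	stored := base64.StdEncoding.EncodeToString([]byte("gYzYfJ5kxzJsdZGK"))

	password, err := decodeSecret(stored)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(password) // gYzYfJ5kxzJsdZGK
}
```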

You can now head to https://localhost:8443/ and use admin:gYzYfJ5kxzJsdZGK to log in.

Use the command below to install the argocd command-line tool. It saves us from creating ArgoCD resources manually in the UI.

$ brew tap argoproj/tap && brew install argoproj/tap/argocd

$ argocd version

argocd: v2.2.5+8f981cc.dirty

BuildDate: 2022-02-05T05:39:12Z

GitCommit: 8f981ccfcf942a9eb00bc466649f8499ba0455f5

GitTreeState: dirty

GoVersion: go1.17.6

Compiler: gc

Platform: darwin/amd64

FATA[0000] Argo CD server address unspecified

Create resources

First we need to log in.

$ argocd --insecure login 127.0.0.1:8443

Username: admin

Password: gYzYfJ5kxzJsdZGK

'admin:login' logged in successfully

Context '127.0.0.1:8443' updated

Add clusters. This is CLI only.

$ argocd cluster add nonprod

INFO[0002] ServiceAccount "argocd-manager" already exists in namespace "kube-system"

INFO[0002] ClusterRole "argocd-manager-role" updated

INFO[0002] ClusterRoleBinding "argocd-manager-role-binding" updated

Cluster 'https://192.168.99.106:8443' added

$ argocd cluster add prod

INFO[0002] ServiceAccount "argocd-manager" already exists in namespace "kube-system"

INFO[0002] ClusterRole "argocd-manager-role" updated

INFO[0002] ClusterRoleBinding "argocd-manager-role-binding" updated

Cluster 'https://192.168.99.107:8443' added

Add application repository.

$ argocd repo add https://github.com/you/pacman \

--username you \

--password ghp_816dac0a8066e737966686b2f4b84d96 \

--type git

Create project. As you can see we are reading from the config repository.

$ argocd proj create --file ~/config/infra/argocd/pacman/project.yaml

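Create applications. The Application manifests also live in the config repository; the dev.yaml, prod.yaml and sbox.yaml files under .infra/helm in the application repository are Helm values files.

$ argocd app create --file ~/config/infra/argocd/pacman/dev.yaml && \
  argocd app create --file ~/config/infra/argocd/pacman/prod.yaml && \
  argocd app create --file ~/config/infra/argocd/pacman/sbox.yaml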

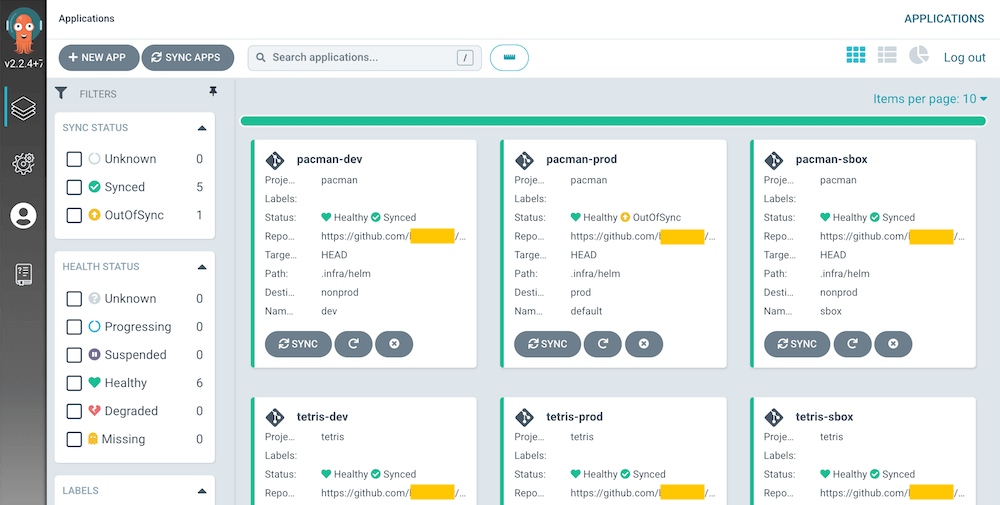

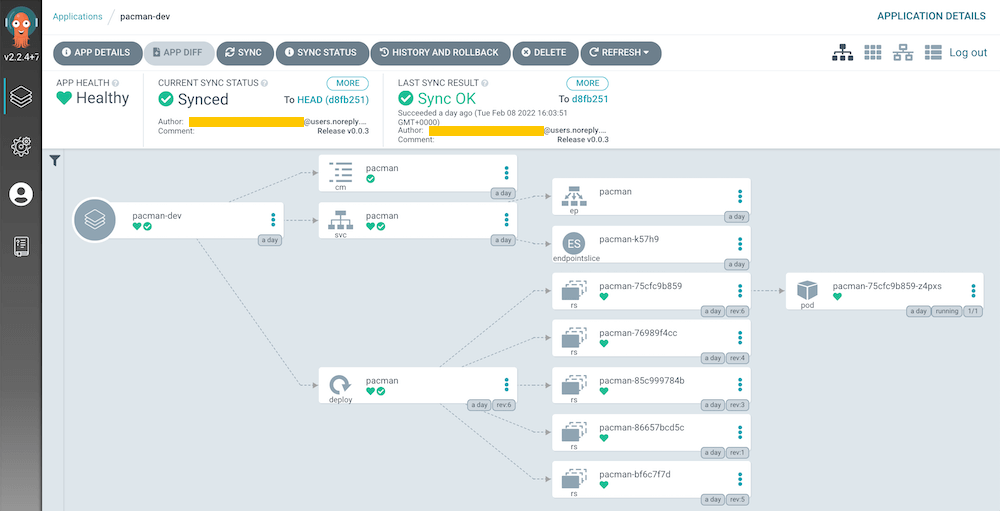

Screenshots

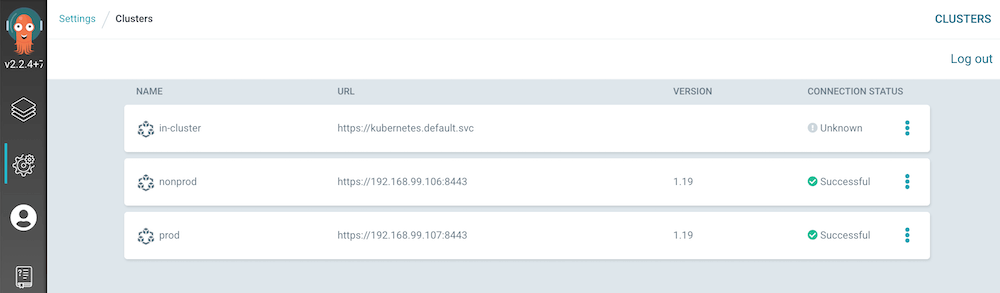

Cluster

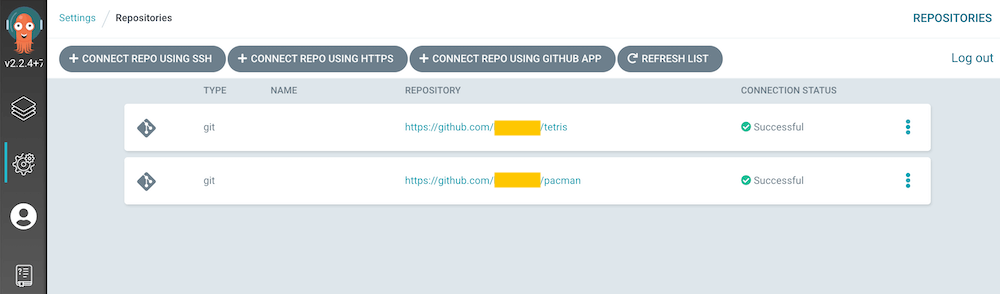

Repository

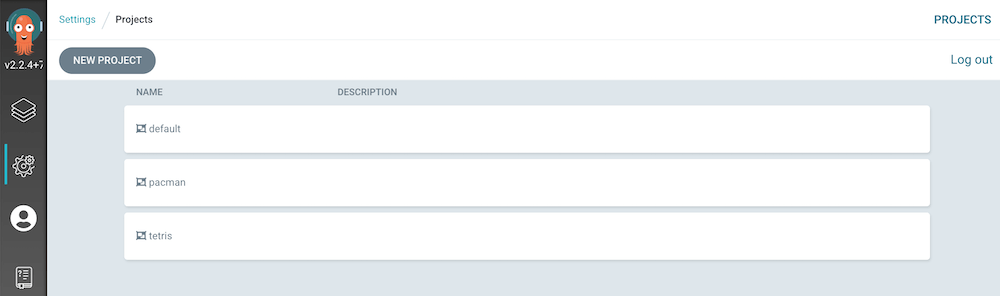

Project

Application