Managing application logs with EFK stack (Elasticsearch, Fluent-bit, Kibana) in Kubernetes

12/08/2021 - DOCKER, ELASTICSEARCH, GO, KUBERNETES

In this example we are going to use a Golang application that writes JSON-formatted logs. The aim is to collect all those logs and visualise them for debugging purposes. For that, we will use Fluent-bit to collect the logs and push them to Elasticsearch. Afterwards, we will use Kibana to visualise them. All of this takes place in a Kubernetes cluster.

Our EFK stack lives in a Kubernetes namespace called monitoring. Fluent-bit can read logs from other namespaces, so our dummy application runs in the dev namespace. Fluent-bit reads logs from the /var/log/containers directory on each node, where every log file name includes the namespace of the pod that produced it. You can use the Exclude_Path option to exclude certain namespaces, as I did. For the sake of high availability, three Elasticsearch pods will be running.
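To make the namespace-based exclusion more concrete, here is a minimal Go sketch of how a namespace can be read from a container log file name. It assumes the usual kubelet layout `<pod>_<namespace>_<container>-<container id>.log`; the helper names (`parseContainerLog`, `excluded`) and the shortened container IDs are hypothetical and only serve the illustration. Fluent-bit itself does this with glob patterns, not with code like this.

```go
package main

import (
	"fmt"
	"path/filepath"
	"strings"
)

// containerLog holds the parts encoded in a /var/log/containers file name.
type containerLog struct {
	Pod, Namespace, Container string
}

// parseContainerLog splits "<pod>_<namespace>_<container>-<id>.log".
// The layout is an assumption based on the usual kubelet convention.
func parseContainerLog(path string) (containerLog, bool) {
	name := strings.TrimSuffix(filepath.Base(path), ".log")
	parts := strings.Split(name, "_")
	if len(parts) != 3 {
		return containerLog{}, false
	}
	// The container ID follows the last hyphen of the third part.
	i := strings.LastIndex(parts[2], "-")
	if i < 0 {
		return containerLog{}, false
	}
	return containerLog{Pod: parts[0], Namespace: parts[1], Container: parts[2][:i]}, true
}

// excluded reports whether the file belongs to one of the given namespaces.
func excluded(path string, namespaces ...string) bool {
	cl, ok := parseContainerLog(path)
	if !ok {
		return false
	}
	for _, ns := range namespaces {
		if cl.Namespace == ns {
			return true
		}
	}
	return false
}

func main() {
	// Hypothetical file names; the hex suffix stands in for a container ID.
	files := []string{
		"/var/log/containers/api-5b4b8fc569-msnjr_dev_golang-0123456789abcdef.log",
		"/var/log/containers/kibana-7f8c5f55c5-94wj6_monitoring_kibana-fedcba9876543210.log",
	}
	for _, f := range files {
		fmt.Println(f, "excluded:", excluded(f, "kube-system", "kubernetes-dashboard", "monitoring"))
	}
}
```

The three namespaces passed to `excluded` mirror the ones listed in the Exclude_Path setting of the Fluent-bit configuration.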

Structure

├── Makefile
├── deploy
│   └── k8s
│       ├── api.yaml
│       ├── elasticsearch.yaml
│       ├── fluentbit.yaml
│       ├── kibana.yaml
│       └── monitoring.yaml
├── docker
│   └── ci
│       └── Dockerfile
├── go.mod
└── main.go

Files

Makefile

# LOCAL -----------------------------------------------------------------------

.PHONY: api-run
api-run:
	go run -race main.go

# DOCKER ----------------------------------------------------------------------

.PHONY: docker-push
docker-push:
	docker build -t you/efk:latest -f ./docker/ci/Dockerfile .
	docker push you/efk:latest
	docker rmi you/efk:latest
	docker system prune --volumes --force

# KUBERNETES ------------------------------------------------------------------

.PHONY: api-deploy
api-deploy:
	kubectl apply -f deploy/k8s/api.yaml

.PHONY: monitoring-deploy
monitoring-deploy:
	kubectl apply -f deploy/k8s/monitoring.yaml
	kubectl apply -f deploy/k8s/elasticsearch.yaml
	kubectl apply -f deploy/k8s/kibana.yaml
	kubectl apply -f deploy/k8s/fluentbit.yaml

.PHONY: kube-api-port-forward
kube-api-port-forward:
	kubectl --namespace=dev port-forward service/api 8080:80

.PHONY: kube-elasticsearch-port-forward
kube-elasticsearch-port-forward:
	kubectl --namespace=monitoring port-forward service/elasticsearch 9200:9200

.PHONY: kube-kibana-port-forward
kube-kibana-port-forward:
	kubectl --namespace=monitoring port-forward service/kibana 5601:5601

.PHONY: kube-test
kube-test:
	curl --request GET http://0.0.0.0:8080/
	curl --request GET http://0.0.0.0:8080/info
	curl --request GET http://0.0.0.0:8080/warning
	curl --request GET http://0.0.0.0:8080/error

main.go

package main

import (
	"net/http"

	"github.com/sirupsen/logrus"
)

func main() {
	// Bootstrap logger. The JSON formatter writes one JSON object per line.
	logrus.SetLevel(logrus.InfoLevel)
	logrus.SetFormatter(&logrus.JSONFormatter{})

	// Bootstrap HTTP handler.
	hom := home{}

	// Bootstrap HTTP router.
	rtr := http.DefaultServeMux
	rtr.HandleFunc("/", hom.home)
	rtr.HandleFunc("/info", hom.info)
	rtr.HandleFunc("/warning", hom.warning)
	rtr.HandleFunc("/error", hom.error)

	// Start HTTP server.
	logrus.Info("application started")
	if err := http.ListenAndServe(":8080", rtr); err != nil && err != http.ErrServerClosed {
		logrus.WithError(err).Fatal("application crashed")
	}
	logrus.Info("application stopped")
}

type home struct{}

// home responds without logging anything.
func (h home) home(w http.ResponseWriter, r *http.Request) {
	_, _ = w.Write([]byte("home page"))
}

// info writes an info-level log entry.
func (h home) info(w http.ResponseWriter, r *http.Request) {
	logrus.Info("welcome to info page")
	_, _ = w.Write([]byte("info page"))
}

// warning writes a warning-level log entry.
func (h home) warning(w http.ResponseWriter, r *http.Request) {
	logrus.Warning("welcome to warning page")
	_, _ = w.Write([]byte("warning page"))
}

// error writes an error-level log entry.
func (h home) error(w http.ResponseWriter, r *http.Request) {
	logrus.Error("welcome to error page")
	_, _ = w.Write([]byte("error page"))
}

Dockerfile

FROM golang:1.15-alpine3.12 AS build
WORKDIR /source
# The build context is the repository root (see the docker-push target).
COPY . .
RUN CGO_ENABLED=0 go build -ldflags "-s -w" -o bin/main main.go

FROM alpine:3.12
COPY --from=build /source/bin/main /main
EXPOSE 8080
ENTRYPOINT ["/main"]

api.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: dev
---
apiVersion: v1
kind: Service
metadata:
  name: api
  namespace: dev
spec:
  type: ClusterIP
  selector:
    app: api
  ports:
    - name: http
      protocol: TCP
      port: 80
      targetPort: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api
  namespace: dev
  labels:
    app: api
spec:
  replicas: 1
  selector:
    matchLabels:
      app: api
  template:
    metadata:
      labels:
        app: api
    spec:
      containers:
        - name: golang
          image: you/efk:latest
          ports:
            - name: http
              protocol: TCP
              containerPort: 8080

monitoring.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: monitoring

elasticsearch.yaml

apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  namespace: monitoring
  labels:
    app: elasticsearch
spec:
  clusterIP: None
  selector:
    app: elasticsearch
  ports:
    - name: http
      protocol: TCP
      port: 9200
    - name: node
      protocol: TCP
      port: 9300
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch-node
  namespace: monitoring
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch:7.2.0
          resources:
            limits:
              cpu: 1000m
              memory: 1Gi
            requests:
              cpu: 500m
              memory: 1Gi
          ports:
            - name: http
              protocol: TCP
              containerPort: 9200
            - name: node
              protocol: TCP
              containerPort: 9300
          volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: cluster.name
              value: k8s-monitoring
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.seed_hosts
              value: "elasticsearch-node-0.elasticsearch,elasticsearch-node-1.elasticsearch,elasticsearch-node-2.elasticsearch"
            - name: cluster.initial_master_nodes
              value: "elasticsearch-node-0,elasticsearch-node-1,elasticsearch-node-2"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
      initContainers:
        - name: chown
          image: busybox
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
        - name: sysctl
          image: busybox
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        - name: ulimit
          image: busybox
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
  volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        storageClassName: standard
        accessModes:
          - ReadWriteOnce
        resources:
          requests:
            storage: 5Gi
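The StatefulSet above runs three master-eligible nodes (replicas: 3, all listed in cluster.initial_master_nodes). As a rough illustration of why three is a common minimum for high availability, the Go sketch below computes the majority needed to elect a master and how many nodes can be lost while that majority survives. It is plain arithmetic, not Elasticsearch code, and `quorum` and `tolerated` are hypothetical helper names.

```go
package main

import "fmt"

// quorum returns the smallest majority of n master-eligible nodes.
func quorum(n int) int { return n/2 + 1 }

// tolerated returns how many of n nodes may fail while a majority remains.
func tolerated(n int) int { return n - quorum(n) }

func main() {
	for _, n := range []int{1, 2, 3, 5} {
		fmt.Printf("nodes=%d quorum=%d tolerated failures=%d\n", n, quorum(n), tolerated(n))
	}
}
```

With three nodes the majority is two, so one pod can fail without losing the cluster. On a single-node minikube cluster all three pods share one host, so this only protects against pod failures, not against losing the machine.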

kibana.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: monitoring
  labels:
    app: kibana
spec:
  selector:
    app: kibana
  ports:
    - name: http
      protocol: TCP
      port: 5601
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: monitoring
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana:7.2.0
          resources:
            limits:
              cpu: 1000m
              memory: 1Gi
            requests:
              cpu: 500m
              memory: 1Gi
          ports:
            - name: http
              protocol: TCP
              containerPort: 5601
          env:
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch:9200

fluentbit.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentbit
  namespace: monitoring
  labels:
    app: fluentbit
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentbit
  labels:
    app: fluentbit
rules:
  - apiGroups:
      - ""
    resources:
      - pods
      - namespaces
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluentbit
roleRef:
  kind: ClusterRole
  name: fluentbit
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: ServiceAccount
    name: fluentbit
    namespace: monitoring
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentbit-config
  namespace: monitoring
  labels:
    k8s-app: fluentbit
data:
  fluent-bit.conf: |
    [SERVICE]
        Flush 5
        Log_Level info
        Daemon Off
        Parsers_File parsers.conf
        HTTP_Server On
        HTTP_Listen 0.0.0.0
        HTTP_Port 2020
    @INCLUDE input-kubernetes.conf
    @INCLUDE filter-kubernetes.conf
    @INCLUDE output-elasticsearch.conf
  input-kubernetes.conf: |
    [INPUT]
        Name tail
        Tag kube.*
        Path /var/log/containers/*.log
        Exclude_Path /var/log/containers/*_kube-system_*.log,/var/log/containers/*_kubernetes-dashboard_*.log,/var/log/containers/*_monitoring_*.log
        Parser docker
        DB /var/log/flb_kube.db
        Mem_Buf_Limit 5MB
        Skip_Long_Lines On
        Refresh_Interval 10
  filter-kubernetes.conf: |
    [FILTER]
        Name kubernetes
        Match kube.*
        Kube_URL https://kubernetes.default.svc:443
        Kube_CA_File /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        Kube_Token_File /var/run/secrets/kubernetes.io/serviceaccount/token
        Kube_Tag_Prefix kube.var.log.containers.
        Merge_Log On
        Merge_Log_Key log_field
        Merge_Log_Trim On
        K8S-Logging.Parser On
        K8S-Logging.Exclude Off
  output-elasticsearch.conf: |
    [OUTPUT]
        Name es
        Host ${FLUENT_ELASTICSEARCH_HOST}
        Port ${FLUENT_ELASTICSEARCH_PORT}
        Match *
        Index kubernetes-logs
        Type json
        Replace_Dots On
        Retry_Limit False
  parsers.conf: |
    [PARSER]
        Name docker
        Format json
        Time_Key time
        Time_Format %Y-%m-%dT%H:%M:%S.%L
        Time_Keep On
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentbit
  namespace: monitoring
  labels:
    app: fluentbit
spec:
  selector:
    matchLabels:
      app: fluentbit
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        app: fluentbit
    spec:
      serviceAccount: fluentbit
      serviceAccountName: fluentbit
      terminationGracePeriodSeconds: 30
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      containers:
        - name: fluentbit
          image: fluent/fluent-bit:1.3.11
          ports:
            - containerPort: 2020
          env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch"
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
          volumeMounts:
            - name: fluentbit-config
              mountPath: /fluent-bit/etc/
            - name: fluentbit-log
              mountPath: /var/log
            - name: fluentbit-lib
              mountPath: /var/lib/docker/containers
              readOnly: true
      volumes:
        - name: fluentbit-config
          configMap:
            name: fluentbit-config
        - name: fluentbit-log
          hostPath:
            path: /var/log
        - name: fluentbit-lib
          hostPath:
            path: /var/lib/docker/containers
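The docker parser and the Merge_Log settings in the configuration above work together: the container runtime wraps every application line in its own JSON object, and the kubernetes filter then tries to decode the inner log string as JSON and stores the result under log_field. The Go sketch below imitates that behaviour so the resulting document shape is easier to picture. It is an approximation written for this article, not Fluent-bit's implementation; `mergeLog` is a hypothetical helper and the sample line is made up.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// mergeLog decodes the runtime's JSON wrapper and, when the "log" value is
// itself a JSON object, stores the decoded object under mergeKey.
func mergeLog(line []byte, mergeKey string) (map[string]interface{}, error) {
	var record map[string]interface{}
	if err := json.Unmarshal(line, &record); err != nil {
		return nil, err
	}
	raw, ok := record["log"].(string)
	if !ok {
		return record, nil
	}
	// Trim the trailing newline before decoding, similar in spirit to Merge_Log_Trim.
	var nested map[string]interface{}
	if err := json.Unmarshal([]byte(strings.TrimSpace(raw)), &nested); err == nil {
		record[mergeKey] = nested
	}
	return record, nil
}

func main() {
	// Hypothetical line as Docker's json-file driver might write it for the /error handler.
	line := []byte(`{"log":"{\"level\":\"error\",\"msg\":\"welcome to error page\",\"time\":\"2021-08-12T10:00:00Z\"}\n","stream":"stderr","time":"2021-08-12T10:00:00.000000000Z"}`)

	record, err := mergeLog(line, "log_field")
	if err != nil {
		panic(err)
	}
	out, _ := json.MarshalIndent(record, "", "  ")
	fmt.Println(string(out))
}
```

Under these assumptions the application's level and msg fields end up at log_field.level and log_field.msg, which is where you would look for them in Kibana.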

Setup

Push the application's Docker image to Docker Hub with the $ make docker-push command.

This setup requires a lot of system resources, so give the cluster as much memory and CPU as you can spare.

$ minikube start --vm-driver=virtualbox --memory 5000 --cpus=3

Prepare the monitoring environment.

$ make monitoring-deploy

Verify the setup.

$ kubectl --namespace=monitoring get all

NAME                          READY   STATUS    RESTARTS   AGE
pod/elasticsearch-node-0      1/1     Running   1          22h
pod/elasticsearch-node-1      1/1     Running   1          22h
pod/elasticsearch-node-2      1/1     Running   1          22h
pod/fluentbit-8z75t           1/1     Running   0          22h
pod/kibana-7f8c5f55c5-94wj6   1/1     Running   1          22h

NAME                    TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
service/elasticsearch   ClusterIP   None            <none>        9200/TCP,9300/TCP   22h
service/kibana          ClusterIP   10.107.77.173   <none>        5601/TCP            22h

NAME                       DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/fluentbit   1         1         1       1            1           <none>          4s

NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/kibana   1/1     1            1           22h

NAME                                DESIRED   CURRENT   READY   AGE
replicaset.apps/kibana-7f8c5f55c5   1         1         1       22h

NAME                                  READY   AGE
statefulset.apps/elasticsearch-node   3/3     22h

Prepare the dev environment.

$ make api-deploy

Verify the setup.

$ kubectl --namespace=dev get all

NAME                       READY   STATUS    RESTARTS   AGE
pod/api-5b4b8fc569-msnjr   1/1     Running   1          22h

NAME          TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
service/api   ClusterIP   10.104.8.184   <none>        80/TCP    22h

NAME                  READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/api   1/1     1            1           22h

NAME                             DESIRED   CURRENT   READY   AGE
replicaset.apps/api-5b4b8fc569   1         1         1       22h

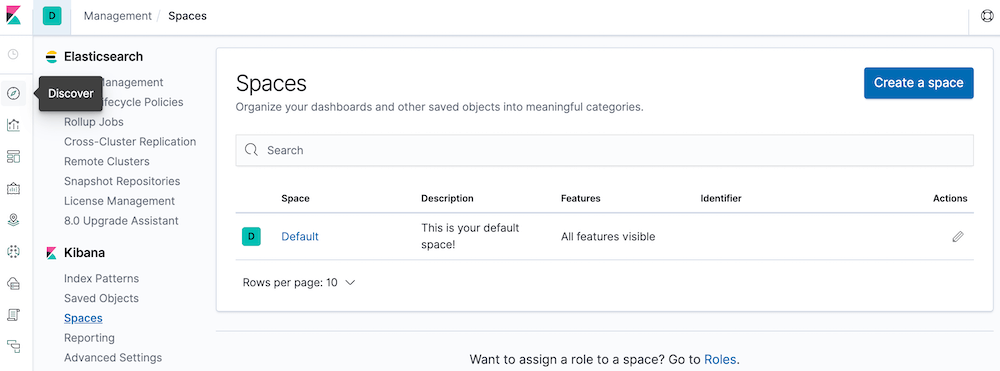

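Expose the services on your local machine with port forwarding. Each command keeps running in the foreground, so use a separate terminal for each one.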

$ make kube-api-port-forward

$ make kube-elasticsearch-port-forward

$ make kube-kibana-port-forward

Let's verify that Elasticsearch is set up correctly.

$ curl http://127.0.0.1:9200

{
  "name" : "elasticsearch-node-1",
  "cluster_name" : "k8s-monitoring",
  "cluster_uuid" : "f5aN9mbdT9KBJBF0zfGjiA",
  "version" : {
    "number" : "7.2.0",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "508c38a",
    "build_date" : "2019-06-20T15:54:18.811730Z",
    "build_snapshot" : false,
    "lucene_version" : "8.0.0",
    "minimum_wire_compatibility_version" : "6.8.0",
    "minimum_index_compatibility_version" : "6.0.0-beta1"
  },
  "tagline" : "You Know, for Search"
}

$ curl http://127.0.0.1:9200/_cluster/state?pretty

{
  "cluster_name" : "k8s-monitoring",
  "cluster_uuid" : "f5aN9mbdT9KBJBF0zfGjiA",
  "version" : 582,
  "state_uuid" : "vMw5Xn-4Rwau_ycwuvLzcg",
  "master_node" : "TOw9L-MnTpGMVMw8w9VxJw",
  "blocks" : { },
  "nodes" : {
    "SIhpI-6GQt6B1EU1QWNDZQ" : {
      "name" : "elasticsearch-node-2",
      "ephemeral_id" : "MPO9j68HSBOrNMNxcGZfNw",
      "transport_address" : "172.17.0.7:9300",
      "attributes" : {
        "ml.machine_memory" : "1073741824",
        "ml.max_open_jobs" : "20",
        "xpack.installed" : "true"
      }
    },
    "TOw9L-MnTpGMVMw8w9VxJw" : {
      "name" : "elasticsearch-node-1",
      "ephemeral_id" : "9v717xsYRQi81XJ4sz_aVQ",
      "transport_address" : "172.17.0.8:9300",
      "attributes" : {
        "ml.machine_memory" : "1073741824",
        "xpack.installed" : "true",
        "ml.max_open_jobs" : "20"
      }
    },
    "GmPm6Qh5TEiQ6INbsJI7Aw" : {
      "name" : "elasticsearch-node-0",
      "ephemeral_id" : "2gytG63MQmOD_X90_XZPAg",
      "transport_address" : "172.17.0.6:9300",
      "attributes" : {
        "ml.machine_memory" : "1073741824",
        "ml.max_open_jobs" : "20",
        "xpack.installed" : "true"
      }
    }
  }
}
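If you prefer to check the cluster state programmatically rather than by eye, a small Go sketch like the one below could decode the response and report how many nodes joined and which one is the elected master. It only models the fields shown in the output above; `clusterState` and `summarize` are hypothetical names, and the embedded JSON is a trimmed copy of that output.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// clusterState models only the fields of the cluster state response used here.
type clusterState struct {
	ClusterName string `json:"cluster_name"`
	MasterNode  string `json:"master_node"`
	Nodes       map[string]struct {
		Name string `json:"name"`
	} `json:"nodes"`
}

// summarize returns the node count and the elected master's name.
func summarize(body []byte) (count int, master string, err error) {
	var cs clusterState
	if err = json.Unmarshal(body, &cs); err != nil {
		return 0, "", err
	}
	m, ok := cs.Nodes[cs.MasterNode]
	if !ok {
		return len(cs.Nodes), "", fmt.Errorf("master %q not in node list", cs.MasterNode)
	}
	return len(cs.Nodes), m.Name, nil
}

func main() {
	// Trimmed copy of the response shown above.
	body := []byte(`{
	  "cluster_name": "k8s-monitoring",
	  "master_node": "TOw9L-MnTpGMVMw8w9VxJw",
	  "nodes": {
	    "SIhpI-6GQt6B1EU1QWNDZQ": {"name": "elasticsearch-node-2"},
	    "TOw9L-MnTpGMVMw8w9VxJw": {"name": "elasticsearch-node-1"},
	    "GmPm6Qh5TEiQ6INbsJI7Aw": {"name": "elasticsearch-node-0"}
	  }
	}`)

	count, master, err := summarize(body)
	if err != nil {
		panic(err)
	}
	fmt.Printf("nodes=%d master=%s\n", count, master) // prints "nodes=3 master=elasticsearch-node-1"
}
```

Three nodes and a resolvable master match the replicas: 3 setting of the StatefulSet, which is what we want to see here.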

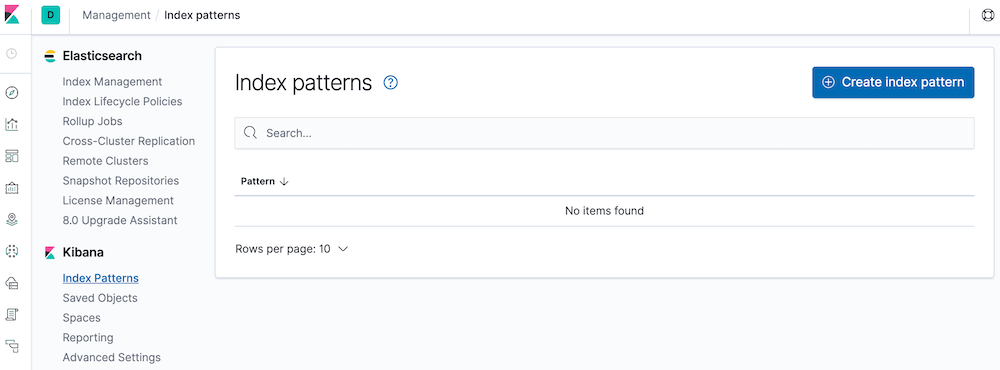

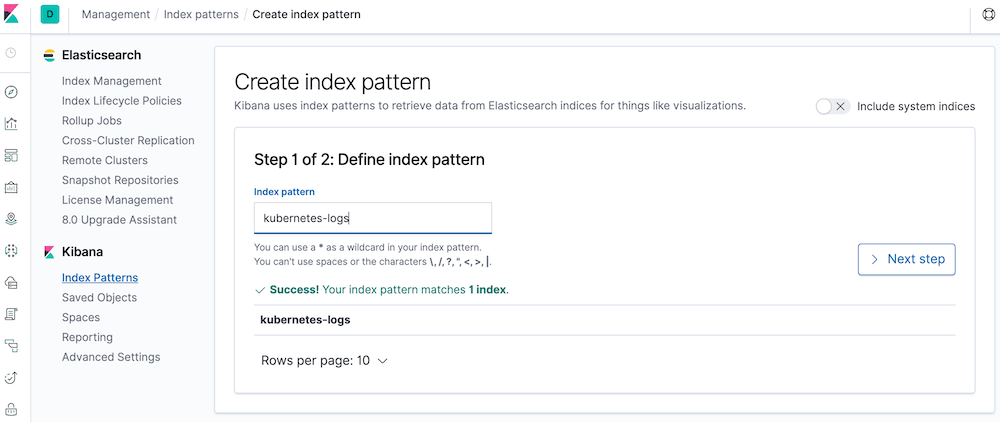

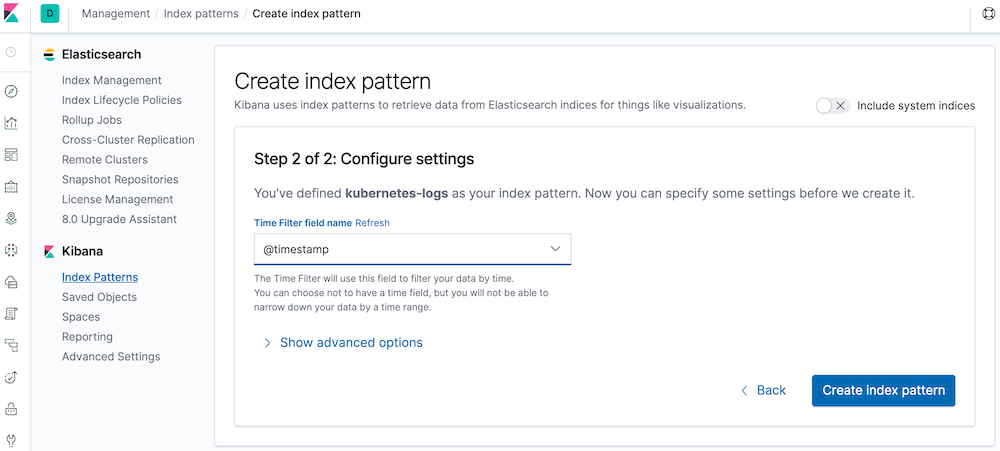

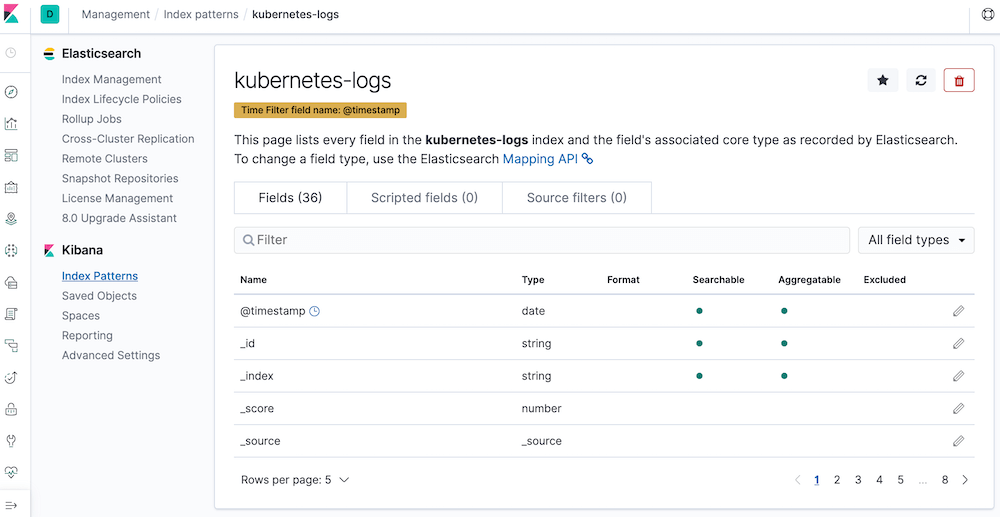

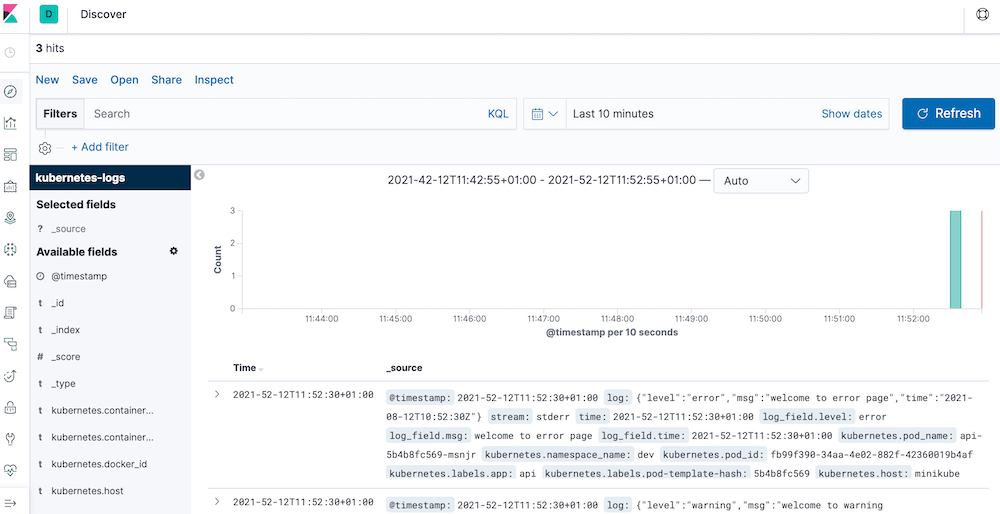

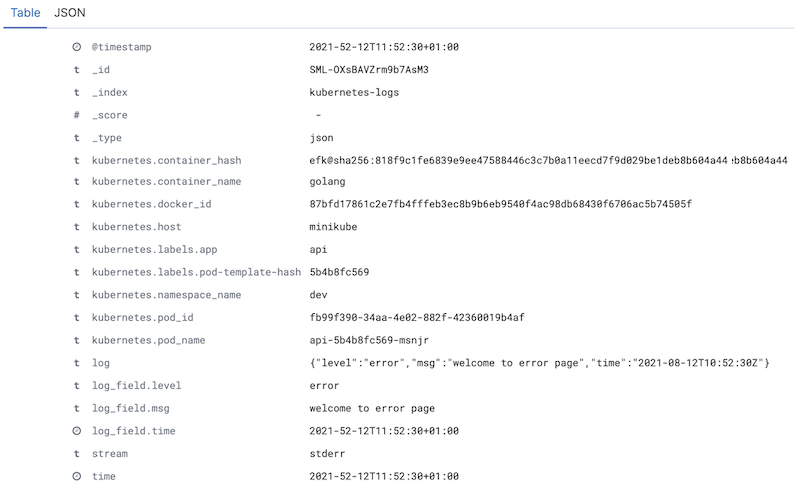

Test

Run $ make kube-test to produce some dummy logs so that there is something to see in Kibana.
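Besides looking in Kibana, you can ask Elasticsearch directly whether the error log arrived. The Go sketch below builds a URI search against the kubernetes-logs index and reads the hit count from the response. It assumes the Elasticsearch port-forward on 127.0.0.1:9200 is still running and that logrus's level field ends up under log_field, as configured by Merge_Log_Key; `searchURL` and `hitCount` are hypothetical helpers, and the exact field path may differ in your setup.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/url"
)

// searchURL builds a URI search request that returns only the hit count.
func searchURL(base, index, query string) string {
	v := url.Values{}
	v.Set("q", query)
	v.Set("size", "0")
	return fmt.Sprintf("%s/%s/_search?%s", base, url.PathEscape(index), v.Encode())
}

// hitCount extracts hits.total.value from an Elasticsearch 7 search response.
func hitCount(body []byte) (int, error) {
	var resp struct {
		Hits struct {
			Total struct {
				Value int `json:"value"`
			} `json:"total"`
		} `json:"hits"`
	}
	if err := json.Unmarshal(body, &resp); err != nil {
		return 0, err
	}
	return resp.Hits.Total.Value, nil
}

func main() {
	u := searchURL("http://127.0.0.1:9200", "kubernetes-logs", "log_field.level:error")

	res, err := http.Get(u)
	if err != nil {
		fmt.Println("request failed (is the port-forward running?):", err)
		return
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		fmt.Println("reading response failed:", err)
		return
	}
	n, err := hitCount(body)
	if err != nil {
		fmt.Println("unexpected response:", err)
		return
	}
	fmt.Println("error-level log entries:", n)
}
```

Fluent-bit flushes every five seconds (Flush 5), so give it a moment after running the test before expecting a non-zero count.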