Diverting requests to relevant servers with Nginx reverse proxy and logging client's request headers

31/03/2019 - DOCKER, LINUX, SYMFONY, NGINX

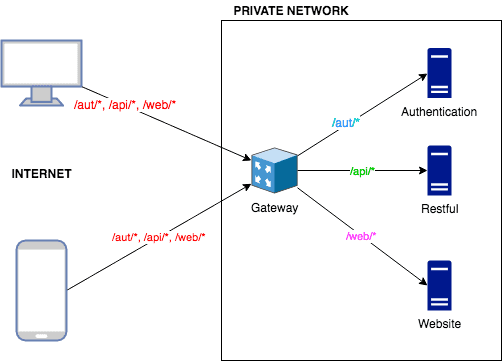

In this example we are going to use the Nginx reverse proxy feature to forward incoming requests to the relevant application servers. We will check the request URL and decide which application to forward the request to. This approach is often used when you don't want to expose your internal network to the Internet: a single public server handles all incoming traffic for you. The example is based on Docker containers, all of which run on a Linux machine. For more information, read the Nginx Reverse Proxy page.

Flow

We have 3 private application servers (Authentication, Restful and Website) on our network and 1 public Nginx reverse proxy server (Gateway).

As you can see above, all requests go to the "Gateway" Nginx server, which then decides where to forward the traffic based on the request URL.

- Requests for /aut/* are forwarded to the "authentication" application server.

- Requests for /api/* are forwarded to the "restful" application server.

- Requests for /web/* are forwarded to the "website" application server.

Any other unmatched request URL results in a "404 Not Found" response.
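The routing decision described above can be sketched in a few lines of Python. This is only an illustration of the logic, not something the setup itself runs: the function name route is made up, and the upstream addresses are the ones used in the Gateway's app.conf listed later in this post.

```python
import re
from typing import Optional
from urllib.parse import urlsplit

# Prefix-to-upstream table, mirroring the proxy_pass targets in the Gateway's app.conf.
UPSTREAMS = {
    "aut": "http://172.17.0.1:5080",
    "api": "http://172.17.0.1:6080",
    "web": "http://172.17.0.1:7080",
}

# One pattern standing in for the three "location ~ ^/xxx/(.*)" blocks.
LOCATION = re.compile(r"^/(aut|api|web)/(.*)")


def route(request_uri: str) -> Optional[str]:
    """Return the upstream URL for a request URI, or None when the Gateway would answer 404."""
    parts = urlsplit(request_uri)
    match = LOCATION.match(parts.path)
    if match is None:
        return None
    prefix, rest = match.groups()
    # Same idea as "$1$is_args$args": keep the remaining path and the query string.
    query = f"?{parts.query}" if parts.query else ""
    return f"{UPSTREAMS[prefix]}/{rest}{query}"


print(route("/aut/other?a=1&b=2"))  # http://172.17.0.1:5080/other?a=1&b=2
print(route("/unknown"))            # None
```

Note that the prefix itself is stripped before forwarding, so the application servers never see /aut, /api or /web in the request path.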

Notes

- The public Gateway server should be open to Internet access and use HTTPS, not HTTP. I kept HTTP as well just for demonstration purposes.

- Direct access to all the other private servers from the Internet must be blocked. Based on the requirements, both HTTP and HTTPS can be enabled. Currently the Gateway server directs all requests to HTTP just for demonstration purposes.

- All private servers will log the request ID of Gateway server as well as their own request IDs.

- The actual client and proxy server related information is passed on to the private servers and will be available in the global $_SERVER variable as HTTP_X_**** headers and as environment variables. See the "Optional" Symfony example below.

- This example uses Docker containers and all the Nginx server logs are sent to stdout, so if you wish you can use the $ docker logs -f {nginx_container_name} command to read the logs.

- To provide better readability the Nginx logs are written in JSON format in app servers.
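The HTTP_X_**** naming mentioned above follows the CGI convention applied to request headers: the name is upper-cased, dashes become underscores and HTTP_ is prepended. A minimal Python sketch of that mapping, where the function name server_key is made up for illustration:

```python
def server_key(header_name: str) -> str:
    """Map an HTTP request header name to the key it gets in PHP's $_SERVER array."""
    return "HTTP_" + header_name.upper().replace("-", "_")


print(server_key("X-Request-Trace-ID"))  # HTTP_X_REQUEST_TRACE_ID
print(server_key("X-Forwarded-For"))     # HTTP_X_FORWARDED_FOR
```

HTTP_X_REQUEST_ID is a special case in this setup: it does not come from a request header but is set explicitly with fastcgi_param in the application's app.conf.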

Requirements

- The request ID of the Gateway is forwarded to the application servers so that it is present in the log files alongside the application server's own request ID. This helps us track a client request through its end-to-end journey. Note: After receiving the request from the Gateway, if an application calls another application, make sure that you add the x-request-trace-id header to the request headers, otherwise the trace will be lost.

- The request ID of the Gateway is sent back to the client as part of the response headers. This helps us find the logs associated with the client's request; otherwise we wouldn't know which log belongs to which user's request.
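As a rough illustration of the note above, an application that calls another application could copy the trace ID from the incoming request into its outgoing headers. The sketch below is in Python purely for illustration; outbound_headers is a hypothetical helper, not part of this setup.

```python
from typing import Dict, Mapping, Optional

TRACE_HEADER = "X-Request-Trace-ID"


def outbound_headers(incoming: Mapping[str, str],
                     extra: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Build headers for a call to another application, keeping the trace ID."""
    headers = dict(extra or {})
    for name, value in incoming.items():
        # Header names are case-insensitive, so compare in lower case.
        if name.lower() == TRACE_HEADER.lower():
            headers[TRACE_HEADER] = value
            break
    return headers


incoming = {"x-request-trace-id": "c83354e6b40bc1ac1959e642bf892562"}
print(outbound_headers(incoming, {"Accept": "application/json"}))
```

The resulting dictionary can be handed to whichever HTTP client the application uses, for example as the headers argument of urllib.request.Request.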

Optional

If you want to log any custom header data coming from Nginx in your Symfony application you can do the following.

# service.yaml
parameters:
    env(HTTP_X_REQUEST_ID): ~
    env(HTTP_X_REQUEST_TRACE_ID): ~

services:
    App\Logger\XRequestIdProcessor:
        arguments:
            $xRequestId: '%env(string:HTTP_X_REQUEST_ID)%'
            $xRequestTraceId: '%env(string:HTTP_X_REQUEST_TRACE_ID)%'
        tags:
            - { name: monolog.processor }

# Custom logger
declare(strict_types=1);

namespace App\Logger;

/**
 * Monolog processor that adds the Nginx request IDs to every log record.
 */
class XRequestIdProcessor
{
    private $xRequestId;
    private $xRequestTraceId;

    public function __construct(?string $xRequestId, ?string $xRequestTraceId)
    {
        $this->xRequestId = $xRequestId;
        $this->xRequestTraceId = $xRequestTraceId;
    }

    public function __invoke(array $record): array
    {
        // Expose both IDs in the log context so entries can be matched to the Nginx logs.
        $record['context']['x_request_id'] = $this->xRequestId;
        $record['context']['x_request_trace_id'] = $this->xRequestTraceId;

        return $record;
    }
}

# The log

[06-Apr-2019 08:05:28] WARNING: [pool www] child 9 said into stdout: "[2019-04-06 08:05:28] request.INFO: Matched route "index". {"route":"index","route_parameters":{"_route":"index","_controller":"App\\Controller\\DockerController::index"},"request_uri":"http://192.168.99.30/","method":"GET","x_request_id":"bce165ceae2ff2b3d58999b834416301","x_request_trace_id":"c83354e6b40bc1ac1959e642bf892562"} []"

You can also use the $request->headers->get('x-request-*****') method in relevant classes such as a controller or an event listener.

Docker

The private application servers use "docker compose" because each of them consists of an Nginx container and a PHP-FPM container. The public Nginx proxy server, however, is a single Nginx container, so it is just a "Dockerfile" solution.

$ docker ps
CONTAINER ID   IMAGE           PORTS                                         NAMES
afbbfdc798e3   dev_aut_nginx   0.0.0.0:5080->80/tcp, 0.0.0.0:5443->443/tcp   dev_aut_nginx_1
32cf3c89ef00   dev_aut_php     9000/tcp                                      dev_aut_php_1
eb570837b30b   dev_res_nginx   0.0.0.0:6080->80/tcp, 0.0.0.0:6443->443/tcp   dev_res_nginx_1
5c9d060723fd   dev_res_php     9000/tcp                                      dev_res_php_1
997a1906f891   dev_web_nginx   0.0.0.0:7080->80/tcp, 0.0.0.0:7443->443/tcp   dev_web_nginx_1
963af53f8c0c   dev_web_php     9000/tcp                                      dev_web_php_1
df86e2c2605d   gateway:nginx   0.0.0.0:8080->80/tcp, 0.0.0.0:8443->443/tcp   gateway_1

We used the commands below to build and run the Nginx reverse proxy server.

$ docker build -t gateway:nginx .

$ docker run --name gateway_1 -p 8080:80 -p 8443:443 -d gateway:nginx

If you want to test all these servers directly on the host OS, you can use the commands below.

# AUTHENTICATION

curl -i http://localhost:5080

curl --insecure -i https://localhost:5443

# RESTFUL

curl -i http://localhost:6080

curl --insecure -i https://localhost:6443

# WEBSITE

curl -i http://localhost:7080

curl --insecure -i https://localhost:7443

# GATEWAY

curl -i http://localhost:8080

curl --insecure -i https://localhost:8443

The IP address of our host Debian OS server is 192.168.99.30, so you can use the addresses below to access the public Gateway reverse proxy server.

http://192.168.99.30:8080/{aut|api|web}/*

https://192.168.99.30:8443/{aut|api|web}/*

Files

Gateway

Dockerfile

FROM nginx:1.15.8-alpine

RUN apk add --no-cache bash

RUN rm -rf /var/cache/apk/*

COPY app.conf /etc/nginx/conf.d/default.conf

COPY nginx.conf /etc/nginx/nginx.conf

COPY app_ssl.crt /etc/ssl/certs/app_ssl.crt

COPY app_ssl.key /etc/ssl/private/app_ssl.key

app.conf

server {

listen 80;

listen 443 default_server ssl;

ssl_certificate /etc/ssl/certs/app_ssl.crt;

ssl_certificate_key /etc/ssl/private/app_ssl.key;

# Send traceable request id to client

add_header X-Request-Trace-ID $request_id;

# Pass traceable request id to upstream server

proxy_set_header X-Request-Trace-ID $request_id;

# Proxy server's host name/IP

proxy_set_header Host $host;

# Scheme (http or https) of the original client request

proxy_set_header X-Forwarded-Proto $scheme;

# Upstream server's host name(:port)/IP(:port)

proxy_set_header X-Forwarded-Host $proxy_host;

# Upstream server's port

proxy_set_header X-Forwarded-Port $proxy_port;

# Client's IP

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

# Any request URI starting with "/aut/" is forwarded to the "authentication" application

location ~ ^/aut/(.*) {

proxy_pass http://172.17.0.1:5080/$1$is_args$args;

# proxy_pass https://172.17.0.1:5443/$1$is_args$args;

}

# Any request URI starting with "/api/" is forwarded to the "restful" application

location ~ ^/api/(.*) {

proxy_pass http://172.17.0.1:6080/$1$is_args$args;

# proxy_pass https://172.17.0.1:6443/$1$is_args$args;

}

# Any request URI starting with "/web/" is forwarded to the "website" application

location ~ ^/web/(.*) {

proxy_pass http://172.17.0.1:7080/$1$is_args$args;

# proxy_pass https://172.17.0.1:7443/$1$is_args$args;

}

# Return 404 for every other request URI

location / {

return 404;

}

}

nginx.conf

user nginx;

# 1 worker process per CPU core.

# Check max: $ grep processor /proc/cpuinfo | wc -l

worker_processes 1;

error_log /var/log/nginx/error.log warn;

pid /var/run/nginx.pid;

events {

# Maximum number of simultaneous connections that each worker process can open.

# worker_processes (1) * worker_connections (2048) = 2048

# Check max: $ ulimit -n

worker_connections 2048;

# Connection processing method. epoll is an efficient method used on Linux 2.6+

use epoll;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format json_combined escape=json

'{'

'"time_local":"$time_local",'

'"client_ip":"$http_x_forwarded_for",'

'"remote_addr":"$remote_addr",'

'"remote_user":"$remote_user",'

'"request":"$request",'

'"status": "$status",'

'"body_bytes_sent":"$body_bytes_sent",'

'"request_time":"$request_time",'

'"http_referrer":"$http_referer",'

'"http_user_agent":"$http_user_agent",'

'"request_id":"$request_id"'

'}';

access_log /var/log/nginx/access.log json_combined;

# Used to reduce 502 and 504 HTTP errors.

fastcgi_buffers 8 16k;

fastcgi_buffer_size 32k;

fastcgi_connect_timeout 300;

fastcgi_send_timeout 300;

fastcgi_read_timeout 300;

# sendfile allows data to be transferred from one file descriptor to another directly in the kernel.

# Combining sendfile with tcp_nopush ensures that packets are full before being sent to the client.

# This reduces network overhead and speeds up file delivery.

# tcp_nodelay forces the socket to send the data.

sendfile on;

tcp_nopush on;

tcp_nodelay on;

# An idle client connection can stay open on the server for up to the given number of seconds.

keepalive_timeout 65;

# Hides Nginx server version in headers.

server_tokens off;

# Sets the maximum size of the types hash tables.

types_hash_max_size 2048;

# Compress files on the fly before transmitting.

# Compressed files are then decompressed by the browsers that support it.

gzip on;

include /etc/nginx/conf.d/*.conf;

}

Authentication

The files of the other two application servers are the same, so I won't add them. Only small bits in the Docker related files differ, such as the names (aut, api, web) and the exposed ports. I also won't add the PHP-FPM files because they are not relevant.

docker-compose.yml

version: "3"

services:
    aut_php:
        build:
            context: "./php"
        hostname: "aut-php"
        volumes:
            - "../..:/app:consistent"
        environment:
            PS1: "\\u@\\h:\\w\\$$ "

    aut_nginx:
        build:
            context: "./nginx"
        hostname: "aut-nginx"
        ports:
            - "5080:80"
            - "5443:443"
        volumes:
            - "../..:/app:consistent"
        depends_on:
            - "aut_php"
        environment:
            PS1: "\\u@\\h:\\w\\$$ "

Dockerfile

FROM nginx:1.15.8-alpine

RUN apk add --no-cache bash

RUN rm -rf /var/cache/apk/*

COPY app.conf /etc/nginx/conf.d/default.conf

COPY nginx.conf /etc/nginx/nginx.conf

COPY app_ssl.crt /etc/ssl/certs/app_ssl.crt

COPY app_ssl.key /etc/ssl/private/app_ssl.key

app.conf

server {

listen 80;

server_name localhost;

root /app/public;

listen 443 default_server ssl;

ssl_certificate /etc/ssl/certs/app_ssl.crt;

ssl_certificate_key /etc/ssl/private/app_ssl.key;

location / {

try_files $uri /index.php$is_args$args;

}

location ~ ^/index\.php(/|$) {

fastcgi_pass aut_php:9000;

fastcgi_split_path_info ^(.+\.php)(/.*)$;

fastcgi_hide_header X-Powered-By;

include fastcgi_params;

fastcgi_param SCRIPT_FILENAME $realpath_root$fastcgi_script_name;

fastcgi_param DOCUMENT_ROOT $realpath_root;

fastcgi_param HTTP_X_REQUEST_ID $request_id;

internal;

}

location ~ \.php$ {

return 404;

}

}

nginx.conf

user nginx;

# 1 worker process per CPU core.

# Check max: $ grep processor /proc/cpuinfo | wc -l

worker_processes 2;

error_log /var/log/nginx/error.log warn;

pid /var/run/nginx.pid;

events {

# Maximum number of simultaneous connections that each worker process can open.

# worker_processes (2) * worker_connections (2048) = 4096

# Check max: $ ulimit -n

worker_connections 2048;

# Connection processing method. epoll is an efficient method used on Linux 2.6+

use epoll;

}

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format json_combined escape=json

'{'

'"time_local":"$time_local",'

'"client_ip":"$http_x_forwarded_for",'

'"remote_addr":"$remote_addr",'

'"remote_user":"$remote_user",'

'"request":"$request",'

'"status": "$status",'

'"body_bytes_sent":"$body_bytes_sent",'

'"request_time":"$request_time",'

'"http_referrer":"$http_referer",'

'"http_user_agent":"$http_user_agent",'

'"request_id":"$request_id",'

'"request_trace_id":"$http_x_request_trace_id"'

'}';

access_log /var/log/nginx/access.log json_combined;

# Used to reduce 502 and 504 HTTP errors.

fastcgi_buffers 8 16k;

fastcgi_buffer_size 32k;

fastcgi_connect_timeout 300;

fastcgi_send_timeout 300;

fastcgi_read_timeout 300;

# sendfile allows data to be transferred from one file descriptor to another directly in the kernel.

# Combining sendfile with tcp_nopush ensures that packets are full before being sent to the client.

# This reduces network overhead and speeds up file delivery.

# tcp_nodelay forces the socket to send the data.

sendfile on;

tcp_nopush on;

tcp_nodelay on;

# An idle client connection can stay open on the server for up to the given number of seconds.

keepalive_timeout 65;

# Hides Nginx server version in headers.

server_tokens off;

# Disable content-type sniffing on some browsers.

add_header X-Content-Type-Options nosniff;

# Enables the cross-site scripting (XSS) filter built into most recent web browsers.

# If the user disables it at the browser level, this rule re-enables it automatically at the server level.

add_header X-XSS-Protection '1; mode=block';

# Prevent the browser from rendering the page inside a frame/iframe to avoid clickjacking.

add_header X-Frame-Options DENY;

# Enable HSTS to prevent SSL stripping.

add_header Strict-Transport-Security 'max-age=31536000; includeSubdomains; preload';

# Prevent browser sending the referrer header when navigating from HTTPS to HTTP.

add_header 'Referrer-Policy' 'no-referrer-when-downgrade';

# Sets the maximum size of the types hash tables.

types_hash_max_size 2048;

# Compress files on the fly before transmitting.

# Compressed files are then decompressed by the browsers that support it.

gzip on;

include /etc/nginx/conf.d/*.conf;

}

Tests and Logs

There is no real difference between HTTP and HTTPS logs, so I'll just add HTTP logs to keep the blog short. Also pay attention to the log entries for "direct access" and "gateway access" because they carry very different information. What we are interested in is the logs related to "gateway access" because that will be the one used in production.

Direct access

We are accessing the application servers directly, without going through the Gateway proxy server.

$ curl -i "http://localhost:5080" # Authentication

# $ curl -i "http://localhost:5080/other"

# $ curl -i "http://localhost:5080/other?a=1&b=2"

# Application Nginx log

{"time_local":"06/Apr/2019:07:46:53 +0000","client_ip":"","remote_addr":"172.21.0.1","remote_user":"","request":"GET / HTTP/1.1","status": "200","body_bytes_sent":"2246","request_time":"1.708","http_referrer":"","http_user_agent":"curl/7.38.0","request_id":"0dc58d378520834c41403bfb3f7f8aae","request_trace_id":""}

Gateway access

We are accessing the Gateway proxy server, which is what production would be like.

$ curl -i "http://localhost:8080/aut/" # Authentication

# $ curl -i "http://localhost:8080/aut/other"

# $ curl -i "http://localhost:8080/aut/other?a=1&b=2"

# Gateway Nginx logs

{"time_local":"06/Apr/2019:07:54:20 +0000","client_ip":"","remote_addr":"172.17.0.1","remote_user":"","request":"GET /aut/ HTTP/1.1","status": "200","body_bytes_sent":"2531","request_time":"0.111","http_referrer":"","http_user_agent":"curl/7.38.0","request_id":"7a3c97e09964d32de6f6e19871f7c39b"}

# Application Nginx log

{"time_local":"06/Apr/2019:07:54:20 +0000","client_ip":"172.17.0.1","remote_addr":"172.21.0.1","remote_user":"","request":"GET / HTTP/1.0","status": "200","body_bytes_sent":"2519","request_time":"0.107","http_referrer":"","http_user_agent":"curl/7.38.0","request_id":"28a2ec6d84029f38a19b36771132d666","request_trace_id":"7a3c97e09964d32de6f6e19871f7c39b"}
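The two entries above belong to the same client request: the Gateway's request_id reappears as request_trace_id in the application log. A small Python sketch of joining the two log streams on that field follows. The function name correlate is hypothetical, and the sample lines are abbreviated copies of the entries above with most fields dropped.

```python
import json
from typing import Dict, Iterable, List


def correlate(gateway_lines: Iterable[str], app_lines: Iterable[str]) -> Dict[str, List[dict]]:
    """Group application log entries under the Gateway request that triggered them."""
    by_trace: Dict[str, List[dict]] = {}
    for line in app_lines:
        entry = json.loads(line)
        trace_id = entry.get("request_trace_id")
        if trace_id:  # Empty for direct access, so those entries are skipped.
            by_trace.setdefault(trace_id, []).append(entry)

    result: Dict[str, List[dict]] = {}
    for line in gateway_lines:
        entry = json.loads(line)
        result[entry["request_id"]] = by_trace.get(entry["request_id"], [])
    return result


# Abbreviated copies of the Gateway and application entries shown above.
gateway_log = ['{"request":"GET /aut/ HTTP/1.1","request_id":"7a3c97e09964d32de6f6e19871f7c39b"}']
app_log = ['{"request":"GET / HTTP/1.0","request_id":"28a2ec6d84029f38a19b36771132d666",'
           '"request_trace_id":"7a3c97e09964d32de6f6e19871f7c39b"}']

for gateway_id, entries in correlate(gateway_log, app_log).items():
    for entry in entries:
        print(gateway_id, "->", entry["request_id"])
```

In practice the input lines could come from the docker logs output of the Gateway and application Nginx containers.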

My docker setup is running on a Vagrant box so I am testing it by accessing it from within the host OS's browser via http://192.168.99.30:8080.

http://192.168.99.30:8080/aut/ # Authentication

# http://192.168.99.30:8080/aut/other

# http://192.168.99.30:8080/aut/other?a=1&b=2

# Gateway Nginx logs

{"time_local":"06/Apr/2019:07:57:40 +0000","client_ip":"","remote_addr":"192.168.99.1","remote_user":"","request":"GET /aut/ HTTP/1.1","status": "200","body_bytes_sent":"1438","request_time":"0.119","http_referrer":"","http_user_agent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36","request_id":"14291e99a28b964c1bb06435c2cb6b04"}

# Application Nginx log

{"time_local":"06/Apr/2019:07:57:40 +0000","client_ip":"192.168.99.1","remote_addr":"172.21.0.1","remote_user":"","request":"GET / HTTP/1.0","status": "200","body_bytes_sent":"2906","request_time":"0.118","http_referrer":"","http_user_agent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36","request_id":"286822194b9dcfb8958da91b8a0f7b35","request_trace_id":"14291e99a28b964c1bb06435c2cb6b04"}

In this example I opened up my host machine to the Internet with "ngrok", so I am testing it by accessing it from within the host OS's browser via http://b229fda7.ngrok.io which points to 192.168.99.30:8080. The logs below are the most production-like ones.

http://b229fda7.ngrok.io/aut/ # Authentication

# http://b229fda7.ngrok.io/aut/other

# http://b229fda7.ngrok.io/aut/other?a=1&b=2

# Gateway Nginx logs

{"time_local":"06/Apr/2019:08:05:28 +0000","client_ip":"31.127.118.116","remote_addr":"192.168.99.1","remote_user":"","request":"GET /aut/ HTTP/1.1","status": "200","body_bytes_sent":"1476","request_time":"0.122","http_referrer":"","http_user_agent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36","request_id":"c83354e6b40bc1ac1959e642bf892562"}

# Application Nginx log

{"time_local":"06/Apr/2019:08:05:28 +0000","client_ip":"31.127.118.116, 192.168.99.1","remote_addr":"172.21.0.1","remote_user":"","request":"GET / HTTP/1.0","status": "200","body_bytes_sent":"2970","request_time":"0.117","http_referrer":"","http_user_agent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.121 Safari/537.36","request_id":"bce165ceae2ff2b3d58999b834416301","request_trace_id":"c83354e6b40bc1ac1959e642bf892562"}
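The client_ip field of the last application log entry holds two addresses because $proxy_add_x_forwarded_for appends the connecting address to whatever X-Forwarded-For header arrived at the Gateway. A short Python sketch of splitting that value is shown below. The function names are illustrative only, and the leftmost entry is only as trustworthy as the proxies in front of the Gateway, since a client can send its own X-Forwarded-For header.

```python
from typing import List, Optional


def forwarded_chain(x_forwarded_for: str) -> List[str]:
    """Split an X-Forwarded-For value into its addresses, in the order they were added."""
    return [ip.strip() for ip in x_forwarded_for.split(",") if ip.strip()]


def original_client(x_forwarded_for: str) -> Optional[str]:
    """Return the leftmost address, or None when the header is empty (direct access)."""
    chain = forwarded_chain(x_forwarded_for)
    return chain[0] if chain else None


print(forwarded_chain("31.127.118.116, 192.168.99.1"))  # ['31.127.118.116', '192.168.99.1']
print(original_client("31.127.118.116, 192.168.99.1"))  # 31.127.118.116
print(original_client(""))                              # None
```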